AI Summary

The AI community has long misunderstood intelligence, focusing on memorization rather than true adaptive reasoning. Despite massive computational scaling, AI models performed poorly on benchmarks like François Chollet's Abstraction and Reasoning Corpus (ARC), which demands genuine problem-solving in novel situations. A breakthrough in 2024 with "test-time adaptation" allowed models to dynamically learn and adapt, achieving near-human performance on ARC. This signifies a crucial shift from simply scaling pre-training to integrating both intuitive and symbolic reasoning, much like human thought.

For decades, the AI community has been playing the wrong game. We've been teaching machines to memorize and regurgitate, then celebrating when they ace tests that humans designed for other humans. But according to François Chollet, the creator of the Keras deep learning library and one of AI's most thoughtful voices, this approach has led us down a dead end.

Speaking at AI Startup School in San Francisco this June, Chollet delivered a provocative message: the entire field has been confused about what intelligence actually means. And until we fix that fundamental misunderstanding, we'll never build truly intelligent machines.

The 50,000x Scale-Up That Changed Nothing

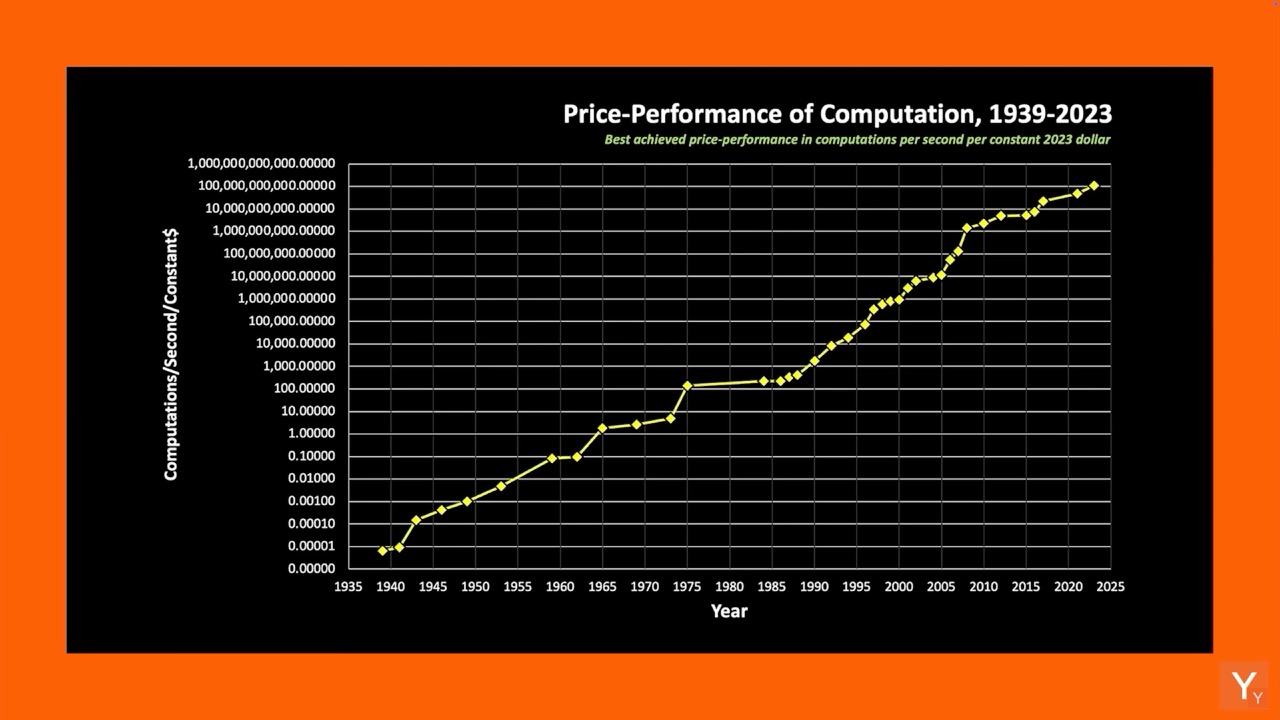

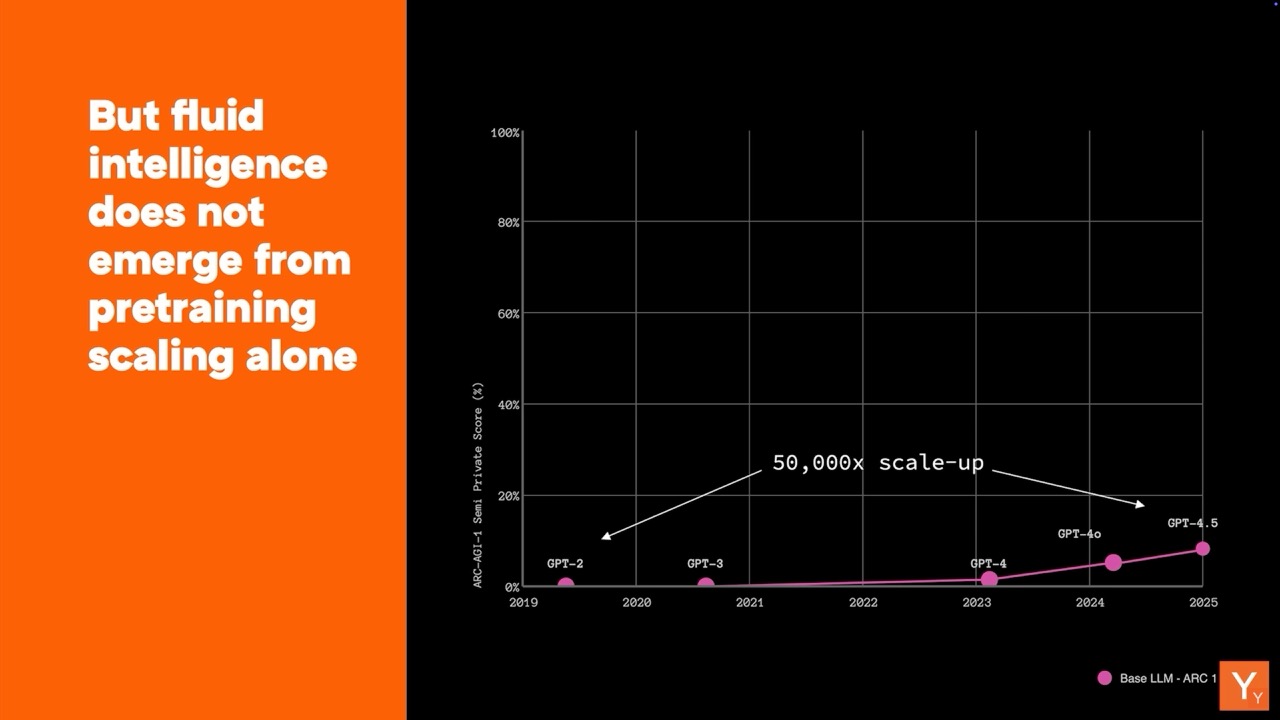

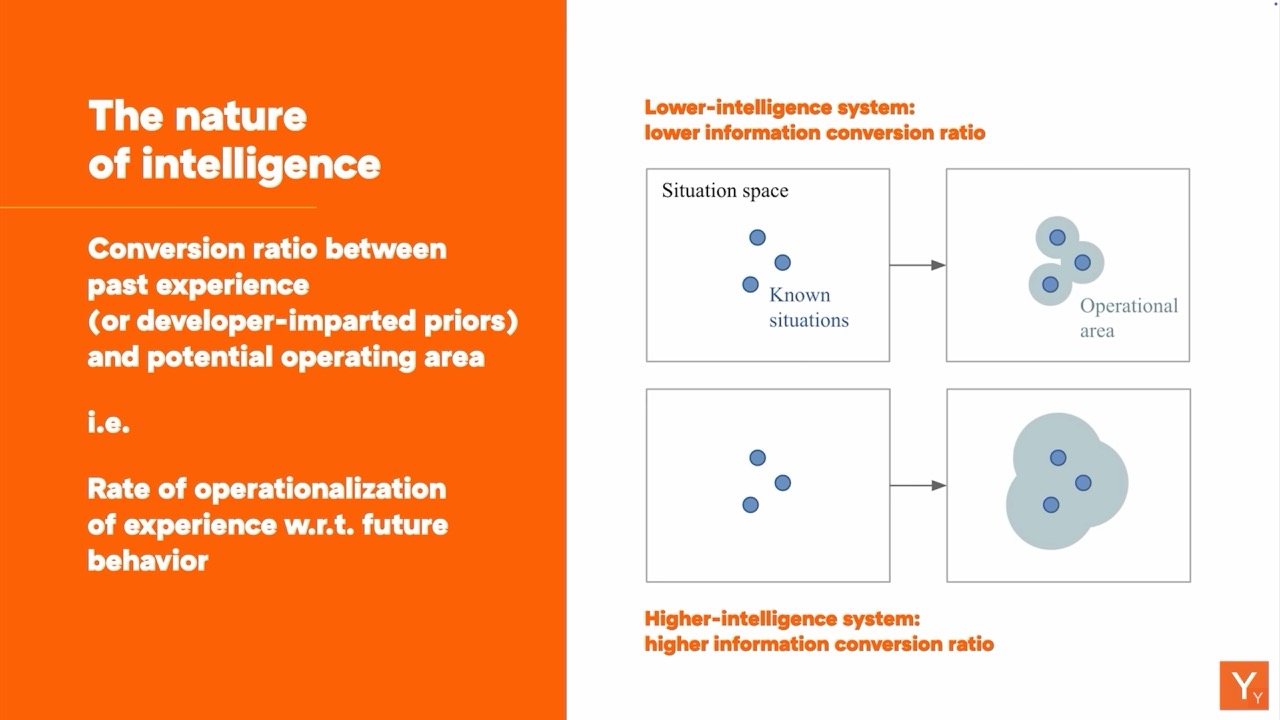

The numbers are staggering. Since 2019, AI models have scaled up by roughly 50,000 times. We've thrown unprecedented amounts of computing power and data at the problem. Yet on Chollet's Abstraction and Reasoning Corpus (ARC) benchmark, these massive models improved from 0% accuracy to just 10%. Meanwhile, any human can easily score above 95%.

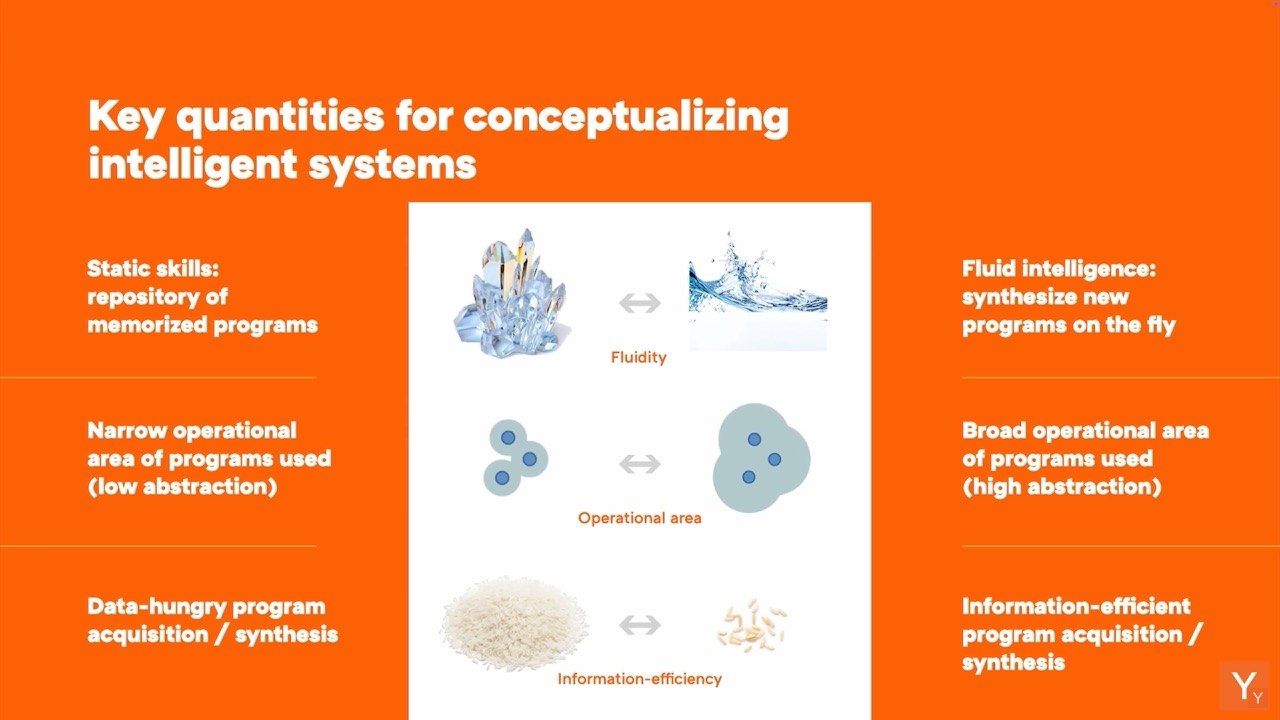

There's a big difference between memorized skills, which are static and task-specific, and fluid general intelligence — the ability to understand something you've never seen before on the fly.

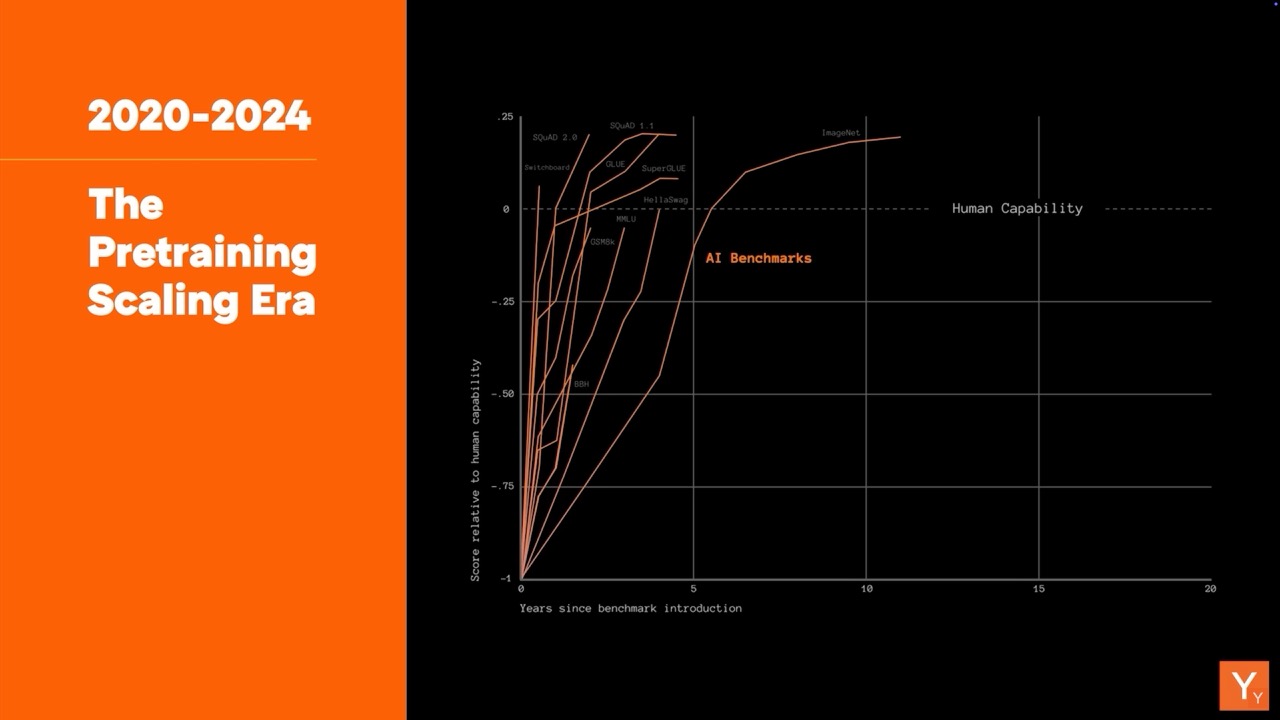

This disconnect reveals a fundamental problem with how we've been thinking about AI progress. For years, the field operated under what Chollet calls the "pre-training scaling paradigm": the belief that making models bigger and feeding them more data would eventually lead to artificial general intelligence (AGI). It took until OpenAI's o3 model in late 2024, which scored 75.7% on ARC's semi-private evaluation set, to prove that a different approach was needed.

The Road Network vs. The Road Building Company

To understand why scaling failed, Chollet offers a compelling analogy. Traditional AI models are like road networks — they can get you from point A to point B, but only for a specific, predefined set of destinations. True intelligence, however, is like having a road-building company. It can connect new places on demand as needs evolve.

Intelligence is the ability to deal with new situations; it's the ability to blaze fresh trails and build new roads. Attributing intelligence to a crystallized behavior program is a category error: you're confusing the process and its output.

This distinction matters more than it might seem. Current AI systems excel at automation, performing known tasks efficiently and at scale. But scientific discovery, the kind of breakthrough thinking that could accelerate human progress, requires something else entirely: the ability to invent solutions to problems we've never encountered before.

The Test That Breaks Every AI System

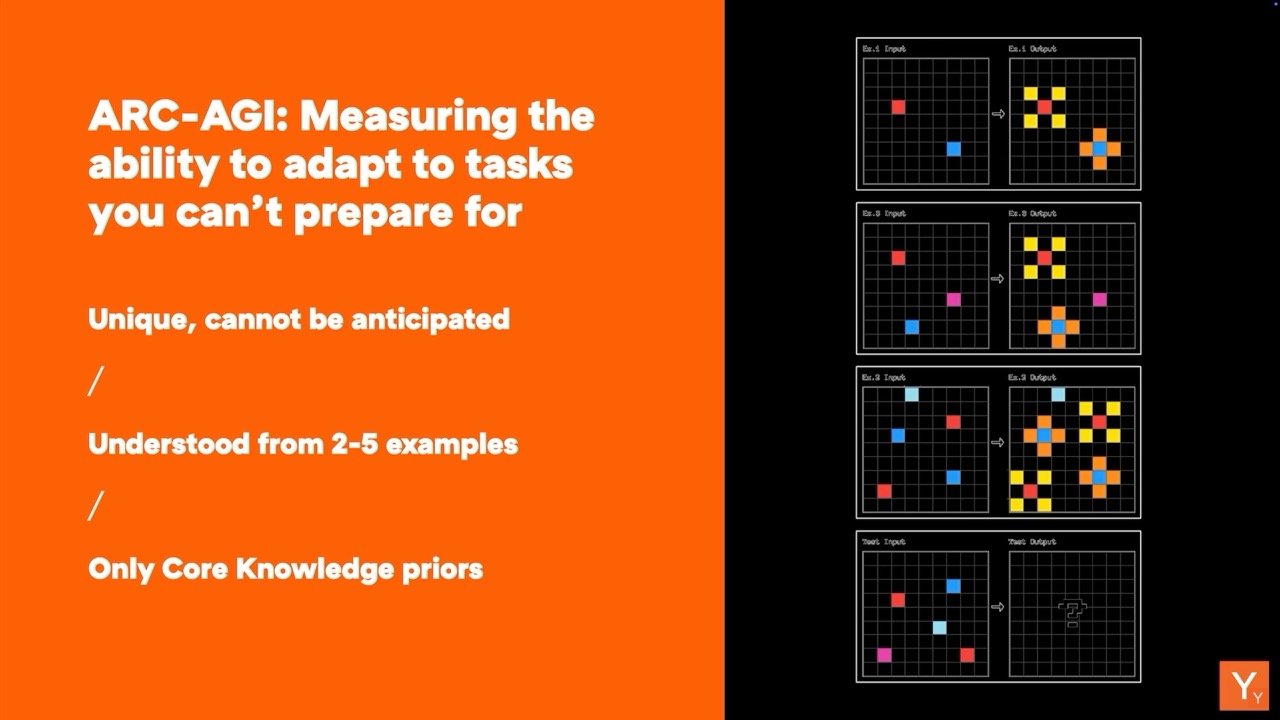

The ARC benchmark, which Chollet released in 2019, was designed to expose this limitation. Unlike traditional AI tests that can be gamed through memorization, ARC presents unique puzzles that require genuine reasoning. Each task involves looking at a few input-output examples and figuring out the underlying pattern to solve a new, never-before-seen variation.

The puzzles look deceptively simple — colorful grids with basic shapes that follow logical rules. But they require what Chollet calls "fluid intelligence": the ability to extract abstract principles from limited examples and apply them to novel situations. It's the kind of thinking a four-year-old can do naturally but that has stumped the most sophisticated AI systems on Earth.
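To make the task format concrete, here is a minimal Python sketch. The grids, the hidden rule (mirror each row), and the helper names are all invented for illustration; real ARC tasks are larger and far more varied. The point is only the shape of the problem: a handful of input-output pairs, a candidate rule, and a check that the rule explains every example before it is applied to a new input.

```python
from typing import Callable, List, Tuple

Grid = List[List[int]]      # each cell holds a color index (ARC uses 0-9)
Pair = Tuple[Grid, Grid]    # (input grid, expected output grid)

# A toy task made up for this sketch. Hidden rule: mirror every row.
train_pairs: List[Pair] = [
    ([[1, 0, 0],
      [0, 2, 0]],
     [[0, 0, 1],
      [0, 2, 0]]),
    ([[3, 3, 0],
      [0, 0, 4]],
     [[0, 3, 3],
      [4, 0, 0]]),
]
test_input: Grid = [[5, 0, 6],
                    [0, 7, 0]]


def identity(grid: Grid) -> Grid:
    """A wrong guess: leave the grid unchanged."""
    return [row[:] for row in grid]


def mirror_rows(grid: Grid) -> Grid:
    """A better guess: reverse each row."""
    return [row[::-1] for row in grid]


def fits_all_examples(rule: Callable[[Grid], Grid], pairs: List[Pair]) -> bool:
    """A rule is only acceptable if it reproduces every training output."""
    return all(rule(inp) == out for inp, out in pairs)


print(fits_all_examples(identity, train_pairs))     # False
print(fits_all_examples(mirror_rows, train_pairs))  # True
print(mirror_rows(test_input))                      # [[6, 0, 5], [0, 7, 0]]
```

Checking a rule is the easy part. The hard part, and the part ARC is built to measure, is coming up with `mirror_rows` in the first place when every task hides a different rule.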

"We recruited random folks — Uber drivers, UCSD students, unemployed people — basically anyone trying to make some money on the side," Chollet said about testing ARC-2, the benchmark's more challenging successor released this year. "All tasks were solved by at least two of the people who saw them. A group of 10 random people with majority voting would score 100%."

Meanwhile, even the most advanced AI models struggle to score above single-digit percentages on these same tasks.

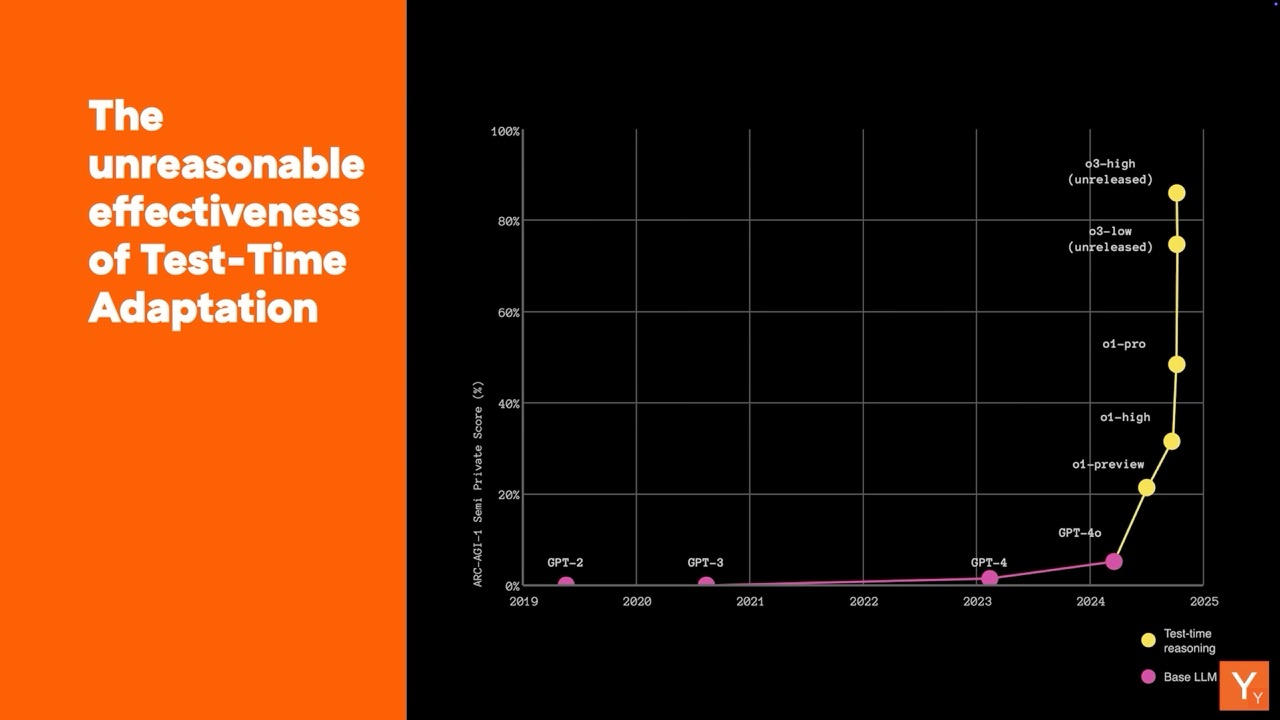

The Breakthrough That Changes Everything

But 2024 marked a turning point. The AI community discovered something called "test-time adaptation" — the ability of models to modify their behavior dynamically when encountering new problems. Instead of just retrieving pre-trained knowledge, these systems can actually learn and adapt during the inference process.

OpenAI's o3 model achieved breakthrough results on ARC-AGI, reaching 87.5% under high-compute conditions — the first time any AI system approached human-level performance on this benchmark. This wasn't achieved through more pre-training data, but through techniques like test-time training and program synthesis, where the model essentially tries to reprogram itself for each specific task.
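As a rough illustration of what "program synthesis at test time" can mean, the sketch below searches over short compositions of grid operations until one reproduces every example of a task. It is a toy under stated assumptions: the three primitives, the `synthesize` function, and the example task are hypothetical, and nothing here reflects how o3 works internally.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Grid = List[List[int]]
Pair = Tuple[Grid, Grid]

# Hypothetical mini-language of grid operations, chosen for this sketch.
PRIMITIVES: Dict[str, Callable[[Grid], Grid]] = {
    "flip_h": lambda g: [row[::-1] for row in g],           # mirror left-right
    "flip_v": lambda g: [row[:] for row in g[::-1]],        # mirror top-bottom
    "transpose": lambda g: [list(col) for col in zip(*g)],  # swap rows and columns
}


def run(program: Tuple[str, ...], grid: Grid) -> Grid:
    """Apply the named primitives to the grid, in order."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid


def synthesize(pairs: List[Pair], max_depth: int = 3) -> Optional[Tuple[str, ...]]:
    """Try programs from shortest to longest; return the first that fits all pairs."""
    for depth in range(1, max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run(program, x) == y for x, y in pairs):
                return program
    return None


# Toy task: each output is the input rotated 90 degrees clockwise.
train: List[Pair] = [
    ([[1, 2], [3, 4]], [[3, 1], [4, 2]]),
    ([[5, 0], [0, 6]], [[0, 5], [6, 0]]),
]

program = synthesize(train)
print(program)                          # a two-step program built from the primitives
print(run(program, [[7, 8], [9, 0]]))   # the same rotation applied to a new grid
```

No single primitive rotates a grid, so the search has to compose two of them for this specific task. That is the sense in which the system "writes a program" at inference time rather than looking up an answer.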

Today, every single AI approach that performs well on ARC is using one of these techniques. We've suddenly moved from the pre-training scaling paradigm to the era of test-time adaptation.

Two Types of Thinking, One Complete Mind

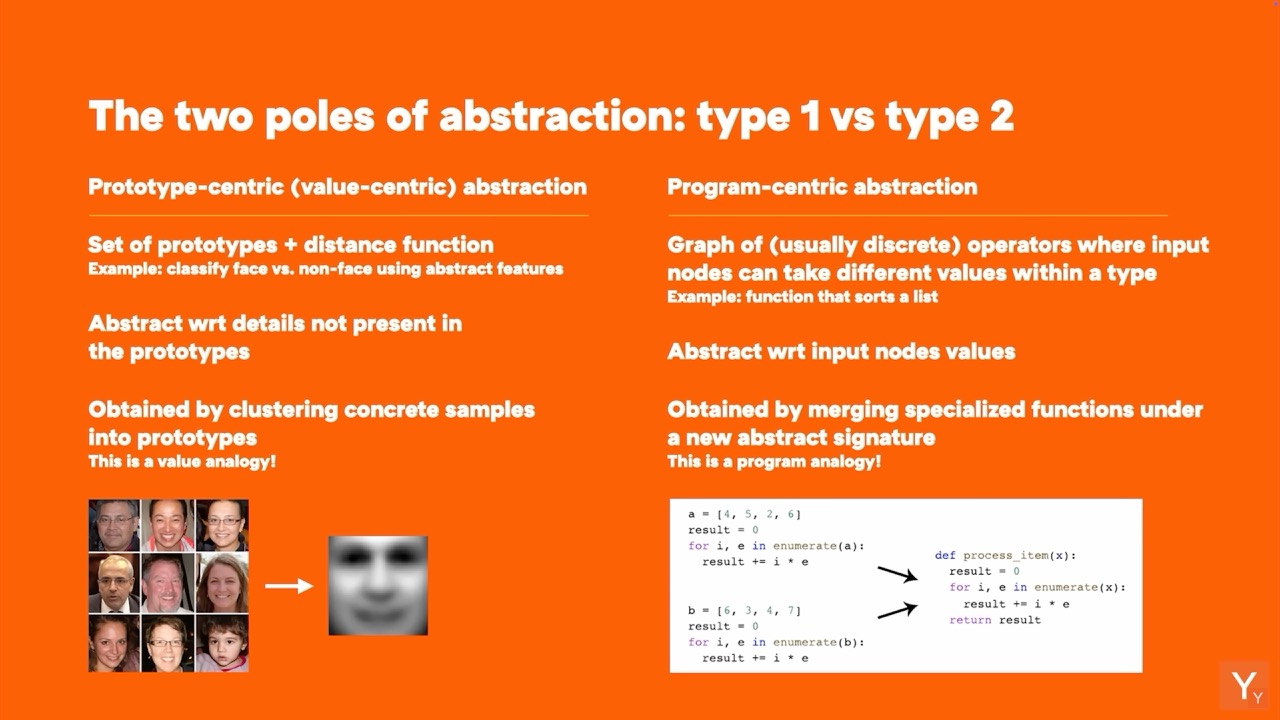

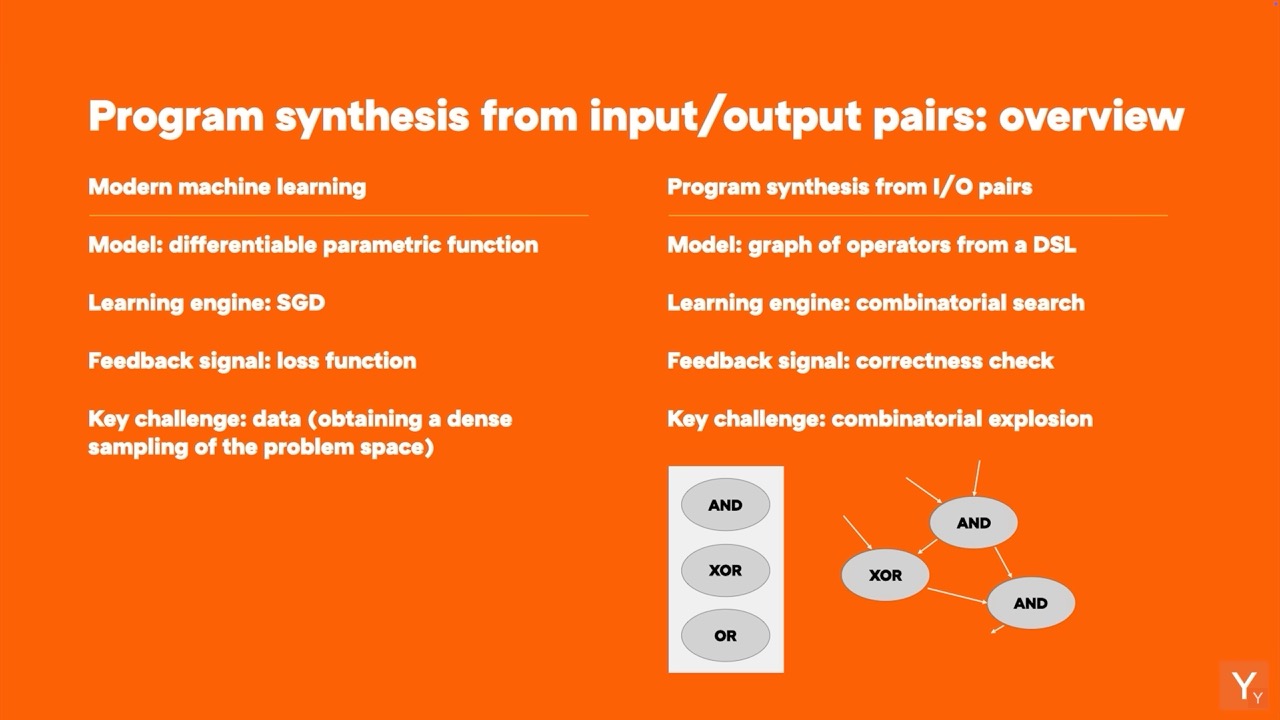

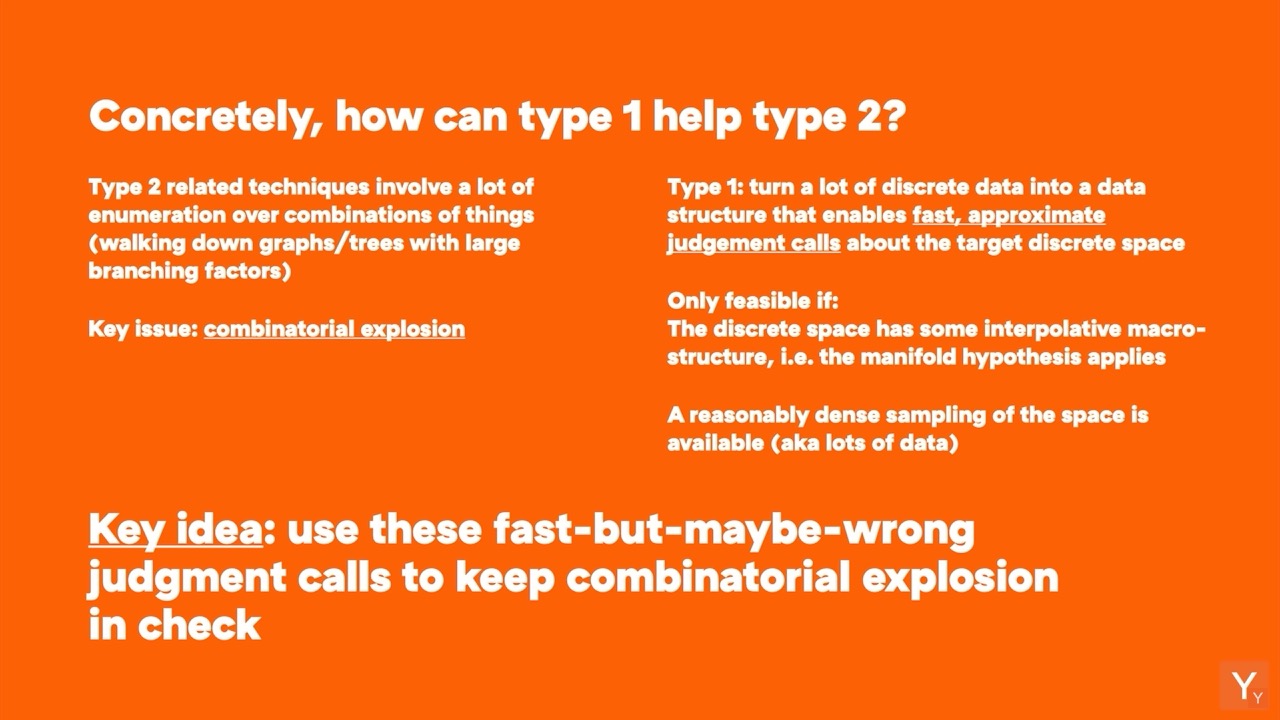

The path forward, according to Chollet, requires understanding that intelligence operates through two complementary processes. Type one abstraction works with continuous patterns — the kind of intuitive pattern recognition that transformers excel at. Type two abstraction deals with discrete, symbolic reasoning — the step-by-step logical thinking that current models struggle with.

Human intelligence seamlessly blends both. When playing chess, for example, you use pattern recognition (type one) to quickly identify promising moves, then use logical calculation (type two) to analyze the most interesting options. You don't calculate every possible move because there are too many; you use intuition to make the search tractable.
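The chess example can be sketched in a few lines of Python. Everything below is a made-up toy, not a chess engine: `intuition` stands in for a fast learned heuristic (here it peeks at a hidden target, which a real system could not do), `calculate` stands in for exact step-by-step evaluation, and `top_k` controls how many moves intuition lets through at each step.

```python
from typing import Optional, Tuple

TARGET = (4, 7, 1)    # hidden "best line" of a toy three-move game
MOVES = range(10)     # ten possible moves at every step


def intuition(prefix: Tuple[int, ...], move: int) -> int:
    """Type one: a cheap guess at how promising a move looks (higher is better)."""
    return -abs(move - TARGET[len(prefix)])


def calculate(line: Tuple[int, ...]) -> int:
    """Type two: exact evaluation of a complete line (0 is the best possible)."""
    return -sum((a - b) ** 2 for a, b in zip(line, TARGET))


def search(prefix: Tuple[int, ...], depth: int, top_k: int):
    """Return (best score, best line, number of complete lines evaluated)."""
    if depth == 0:
        return calculate(prefix), prefix, 1
    # Intuition ranks the moves; only the top_k most promising are explored.
    ranked = sorted(MOVES, key=lambda m: intuition(prefix, m), reverse=True)
    best_score: Optional[int] = None
    best_line: Tuple[int, ...] = ()
    evaluated = 0
    for move in ranked[:top_k]:
        score, line, n = search(prefix + (move,), depth - 1, top_k)
        evaluated += n
        if best_score is None or score > best_score:
            best_score, best_line = score, line
    return best_score, best_line, evaluated


print(search((), 3, top_k=10))  # (0, (4, 7, 1), 1000): exhaustive calculation
print(search((), 3, top_k=3))   # (0, (4, 7, 1), 27): intuition prunes the tree
```

Both runs find the same line, but the pruned search evaluates 27 complete lines instead of 1,000. The gap widens exponentially with depth, which is why neither intuition alone nor calculation alone is enough.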

I really don't think you're going to go very far if you go all in on just one of them. Human intelligence combines perception and intuition together with explicit step-by-step reasoning. We combine both forms of abstraction in all our thoughts and actions.

The Programmer in the Machine

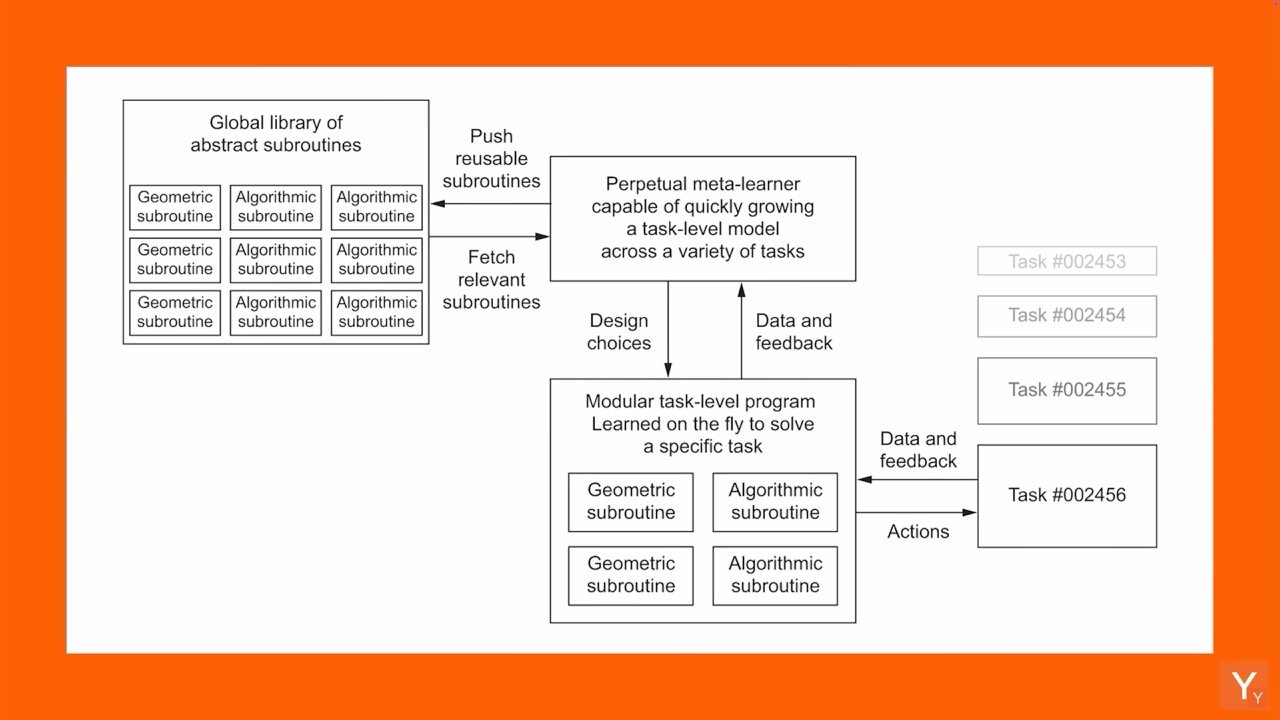

This insight shapes Chollet's vision for the next generation of AI. Instead of monolithic models trying to do everything, he envisions systems that work more like human programmers approaching a new problem. When faced with an unfamiliar task, a skilled programmer doesn't start from scratch — they draw from a library of existing tools and techniques, combining them in novel ways to create a solution.

"AI is going to move towards systems that are more like programmers that approach a new task by writing software for it," Chollet predicted. These systems would maintain a constantly evolving library of reusable abstractions, much like how software engineers contribute useful libraries to platforms like GitHub for others to build upon.

The programmer-like AI would blend deep learning modules for pattern recognition with algorithmic modules for logical reasoning, guided by learned intuitions about which combinations might work for specific problems. This approach could potentially overcome the combinatorial explosion that makes pure program search intractable while avoiding the data hunger that makes pure deep learning inefficient.
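One way to picture the "evolving library of reusable abstractions" is the Python sketch below. It is a loose illustration, not a description of Chollet's system: the two starting primitives, the integer tasks, and the naming scheme are all assumptions made for the example. A solved task is stored in the library as a new building block, and a later, harder task is then found with a shorter program and fewer candidates tried.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Fn = Callable[[int], int]
Examples = List[Tuple[int, int]]

# Hypothetical starting library of two primitives.
library: Dict[str, Fn] = {
    "inc": lambda x: x + 1,
    "double": lambda x: x * 2,
}


def compose(names: Tuple[str, ...]) -> Fn:
    """Build a function that applies the named library entries in order."""
    def program(x: int) -> int:
        for name in names:
            x = library[name](x)
        return x
    return program


def search(examples: Examples, max_depth: int) -> Tuple[Optional[Tuple[str, ...]], int]:
    """Brute-force search; returns (program or None, candidates tried)."""
    tried = 0
    for depth in range(1, max_depth + 1):
        for names in product(list(library), repeat=depth):
            tried += 1
            candidate = compose(names)
            if all(candidate(x) == y for x, y in examples):
                return names, tried
    return None, tried


task_a: Examples = [(0, 2), (1, 4), (2, 6)]     # f(x) = (x + 1) * 2
task_b: Examples = [(0, 6), (1, 10), (2, 14)]   # f(x) = task_a applied twice

# Cost of solving task B from the two raw primitives alone.
_, cost_before = search(task_b, max_depth=4)

# Solve the easier task A, then store its solution as a reusable abstraction.
names_a, _ = search(task_a, max_depth=4)
library["+".join(names_a)] = compose(names_a)

# Task B again, now with the richer library.
names_b, cost_after = search(task_b, max_depth=4)

print(names_a)                  # solution to task A in terms of raw primitives
print(names_b)                  # solution to task B reuses the stored abstraction
print(cost_before, cost_after)  # candidates tried without and with the abstraction
```

The saving is small in a toy this size, and a bigger library also widens every level of the search. That trade-off is where the learned intuitions come in: they decide which library entries are worth trying for a given problem.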

The Lab That's Building Tomorrow's AI

Chollet isn't just theorizing about these ideas — he's building them. His new research lab, called NDEA, focuses specifically on creating AI capable of independent scientific discovery. The goal isn't just automation of known processes, but genuine invention of new solutions to previously unsolved problems.

"We started NDEA because we believe that to dramatically accelerate scientific progress, we need AI that's capable of independent invention and discovery," Chollet explained. "We need AI that could expand the frontiers of knowledge, not just operate within them."

Their first milestone is ambitious: solve ARC using a system that starts knowing nothing about the benchmark. If successful, it would demonstrate an AI that can acquire new reasoning capabilities purely through interaction with novel problems — a crucial step toward systems that could tackle humanity's greatest scientific challenges.

The Road Ahead

Despite recent progress, Chollet believes we're still far from true AGI. Even OpenAI's impressive o3 model would likely score under 30% on ARC-2, while humans would still achieve over 95%. The efficiency gap remains enormous: current test-time adaptation techniques require thousands of dollars of compute to achieve human-level performance on individual problems.

But the direction is clear. The field has moved away from the naive belief that more data and bigger models alone will solve intelligence. Instead, researchers are exploring how to build systems that can truly adapt, reason about novel situations, and synthesize new solutions from fundamental building blocks.

You will know we are close to having AGI when it becomes increasingly difficult to come up with tasks that any one of you can do easily but AI cannot figure out. We're clearly not there yet.
