AI Summary

At Google I/O 2025, Google leaders discussed how AI, particularly the multimodal Gemini model, is revolutionizing how we find and interact with information. They highlighted the transformation of Google Search into an intelligent research assistant capable of handling complex, multi-step queries and bridging language barriers. The leaders also touched on the delicate balance between speed and quality in AI-powered search, the rise of AI agents that can utilize tools and perform actions, and Google's vision for a personal, proactive, and powerful universal AI assistant.

At

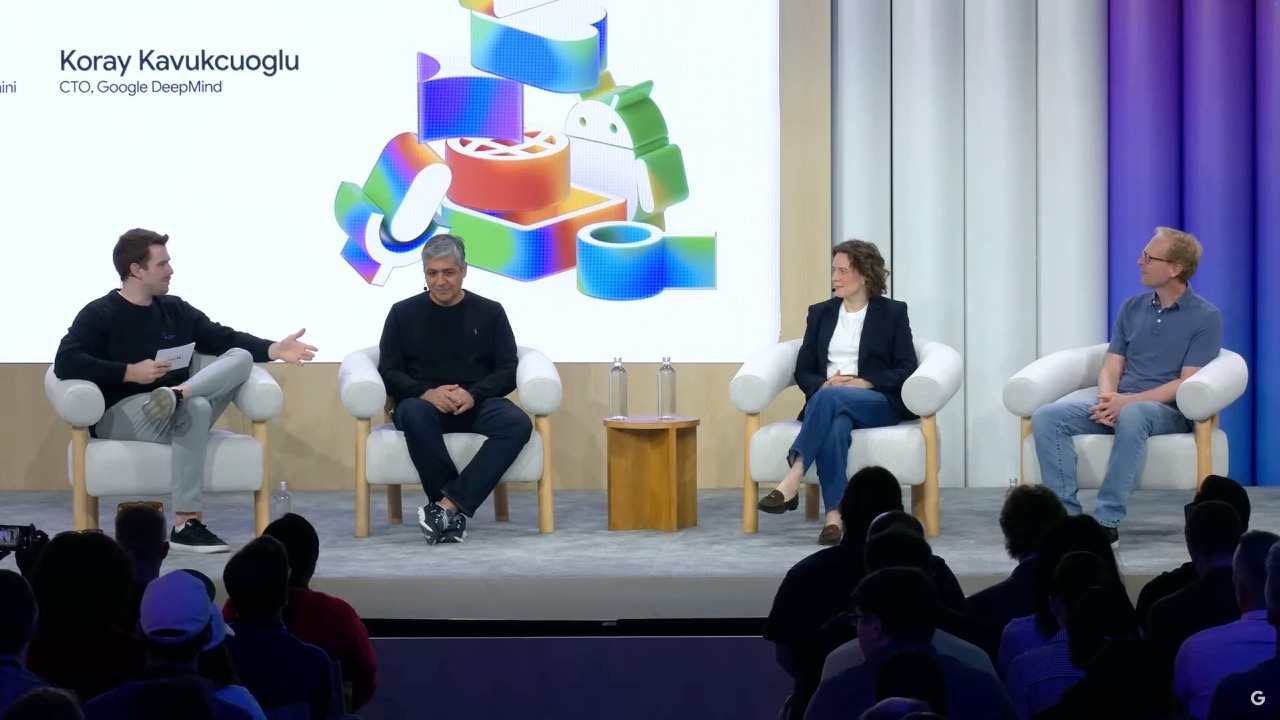

Google I/O 2025, three of the company's most influential leaders sat down for a candid conversation that revealed the dramatic transformation happening behind the scenes. DeepMind CTO Koray Kavukcuoglu, Search VP Liz Reid, and Google Labs director Josh Woodward pulled back the curtain on how artificial intelligence is fundamentally changing the way we find and interact with information.

Their conversation offers a glimpse into Google's ambitious vision: transforming search from a simple information retrieval tool into an intelligent research assistant that can handle complex, multi-step queries in ways that would have seemed impossible just a few years ago.

The Multimodal Foundation: Why Everything Started With Gemini

The conversation began with a fundamental question about Google's approach to artificial general intelligence (AGI). Kavukcuoglu explained that Gemini was designed from the ground up to be "natively multimodal" because the team believes this is the path to AGI.

When we say multimodality, sometimes we think about just video, audio, text, but it's also more than that. Actions are important too. Tool calls, function calls—these are natural for agent models to do.

Instead of building separate models for different tasks and trying to connect them, Google built one model that can naturally understand and generate different types of content while also knowing when and how to use external tools.

Kavukcuoglu pointed to voice coding as an example: "You can speak to the model and say 'I want an application that can do this and this,' and by the way, here's a little sketch, and I want it to look like that, and then the model can generate that."

Search Gets Smarter: From Keywords to Conversations

Perhaps nowhere is this transformation more evident than in Google Search itself. Reid, who has been at Google for 22 years and witnessed the evolution of search from its earliest days, described how AI is solving one of search's longest-standing limitations.

For a long time, your ability to answer a question was limited based on whether or not the answer was all in one place. If you had a multi-part question with five constraints, you had to break it up into each constraint.

The new AI-powered search changes this completely. Using what Google calls the "query fan-out technique," the system can take a complex question like planning a camping trip with specific family members and pets, automatically break it down into sub-queries, search for relevant information across the web, and synthesize a comprehensive answer.
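To make the idea concrete, here is a toy Python sketch of a fan-out pipeline: split a question into one sub-query per constraint, run the sub-searches concurrently, and merge what comes back. It is an illustration under stated assumptions, not Google's implementation. The helpers `decompose`, `search`, and `synthesize` are hypothetical, the "web" is a small in-memory dict of made-up snippets, and a real system would presumably use the model itself both to plan the sub-queries and to write the final answer.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class SubResult:
    sub_query: str
    snippets: list[str]


def decompose(question: str, constraints: list[str]) -> list[str]:
    # Hypothetical planner: one sub-query per constraint. A production system
    # would more plausibly ask the model itself to propose the sub-queries.
    return [f"{question} {constraint}" for constraint in constraints]


def search(sub_query: str, index: dict[str, list[str]]) -> SubResult:
    # Toy retrieval over an in-memory dict: a keyword's snippets match when the
    # keyword appears as a word in the sub-query. Stands in for real web search.
    words = set(sub_query.lower().split())
    hits = [s for keyword, snippets in index.items() if keyword in words for s in snippets]
    return SubResult(sub_query, hits)


def synthesize(results: list[SubResult]) -> list[str]:
    # Merge evidence from every branch, dropping duplicates but keeping order.
    # A real system would have the model write a prose answer from this evidence.
    merged: list[str] = []
    for result in results:
        for snippet in result.snippets:
            if snippet not in merged:
                merged.append(snippet)
    return merged


def fan_out(question: str, constraints: list[str], index: dict[str, list[str]]) -> list[str]:
    sub_queries = decompose(question, constraints)
    # The sub-searches are independent, so run them concurrently;
    # pool.map returns results in the same order as sub_queries.
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = list(pool.map(lambda q: search(q, index), sub_queries))
    return synthesize(results)


# Made-up toy data for illustration only.
index = {
    "dog": ["Campsite A allows dogs on leash."],
    "kids": ["Campsite B has a playground."],
    "lake": ["Campsite A is on a lake."],
}
answer = fan_out("campsite near Yosemite", ["dog friendly", "good for kids", "near a lake"], index)
# answer == ['Campsite A allows dogs on leash.', 'Campsite B has a playground.', 'Campsite A is on a lake.']
```

Each constraint becomes its own search, so no single page has to satisfy all of them at once; the merge step is where the pieces are brought back together.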

But the benefits extend far beyond convenience for English-speaking users. Reid highlighted how this technology is democratizing access to information globally:

Previously, your access to information was partly limited based on the language you spoke. Now with the models, you can ask the question in your language and we can find the content in another language and transform it back in a way you understand.

The Speed vs Quality Balancing Act

Rolling out AI-powered search to billions of users isn't just a technical challenge; it's a delicate balancing act between speed and quality. Reid shared insights into the meticulous process behind the previous day's rollout of AI Mode to all US users.

There were multiple times where we wanted to roll out a bigger model on a slice of queries, but we didn't pick the right slice because it was a better response, but the amount of additional time it took wasn't worth the added value to the user.

The team discovered that user expectations for response time vary dramatically based on the complexity of their query. Some users will wait five seconds for something that would normally take them 30 minutes to research, but for simpler queries, even 1.2 seconds can be too long. This led to the development of different search experiences:

- AI Overviews: Fast responses for everyday queries integrated into main search results

- AI Mode: More advanced thinking and reasoning for complex questions

- Deep Search: Coming soon for tasks where users are willing to wait several minutes for expert-level analysis
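One way to picture this tiering is as a router that spends extra latency only when a query seems complex enough to justify it. The sketch below is hypothetical throughout: the tier latencies are illustrative placeholders rather than published figures, and counting comma- or "and"-separated clauses is a deliberately crude stand-in for however Google actually judges query complexity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    expected_latency_s: float  # illustrative numbers only, not Google's figures


# Ordered from fastest to slowest (and, presumably, most thorough).
TIERS = (
    Tier("AI Overviews", 1.0),
    Tier("AI Mode", 5.0),
    Tier("Deep Search", 240.0),
)


def count_constraints(query: str) -> int:
    # Crude, hypothetical complexity proxy: clauses separated by commas or " and ".
    parts = query.lower().replace(" and ", ",").split(",")
    return sum(1 for part in parts if part.strip())


def latency_budget_s(query: str) -> float:
    # Hypothetical patience model: a one-clause query gets about one second,
    # and each extra constraint multiplies the user's tolerance by five.
    return 1.0 * 5 ** (count_constraints(query) - 1)


def route(query: str) -> Tier:
    # Pick the slowest tier that still fits the budget, so extra latency is
    # only spent where the (assumed) added quality is worth the wait.
    budget = latency_budget_s(query)
    chosen = TIERS[0]
    for tier in TIERS:
        if tier.expected_latency_s <= budget:
            chosen = tier
    return chosen
```

With these assumptions, `route("weather tomorrow")` lands on AI Overviews, while `route("campsite near a lake, dog friendly and good for kids")` gets a 25-second budget and is sent to AI Mode. Choosing the slowest tier that fits the budget mirrors Reid's point: a bigger model is only worth using when the added value outweighs the added wait.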

The Rise of AI Agents: When Models Become Tools

One of the most significant developments discussed was Gemini 2.0's native integration with tools, particularly search. This represents a shift from AI models that simply generate text to systems that can actively use tools to accomplish tasks.

A big part of our daily lives is we use a lot of tools, we build a lot of tools, and then we use those tools. There's a world in which the models should create their own tools as well.

The integration with search is particularly significant because it gives the AI access to fresh, factual information from the web in real time. But this is just the beginning. The team revealed that they're working toward a future where models can perform UI actions on computers by default through new action APIs.
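The basic tool-use loop behind such agents can be sketched in a few lines: the model either answers directly or emits a structured tool call, the runtime executes the call, and the result is fed back for the next turn. This is a generic illustration, not the Gemini API. The JSON call format, the `TOOLS` registry, the stub `web_search`, and `scripted_model` (which stands in for a real model) are all assumptions.

```python
import json
from typing import Callable, Optional

# Hypothetical tool registry: tool name -> Python function.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    TOOLS[fn.__name__] = fn
    return fn


@tool
def web_search(query: str) -> str:
    # Stub: a real agent would call a search backend here.
    return f"[stub result for: {query}]"


def parse_tool_call(reply: str) -> Optional[dict]:
    # Assumed convention: a tool call is a JSON object with "tool" and "args";
    # anything else is treated as the final answer.
    try:
        obj = json.loads(reply)
    except json.JSONDecodeError:
        return None
    return obj if isinstance(obj, dict) and "tool" in obj else None


def run_agent(model: Callable[[list], str], user_msg: str, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": user_msg}]
    for _ in range(max_steps):
        reply = model(history)
        call = parse_tool_call(reply)
        if call is None:
            return reply  # the model chose to answer directly
        history.append({"role": "assistant", "content": reply})
        fn = TOOLS.get(call["tool"])
        result = fn(**call.get("args", {})) if fn else f"error: unknown tool {call['tool']!r}"
        # Feed the tool output back so the next model turn can use it.
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not produce an answer within max_steps")


def scripted_model(history: list) -> str:
    # Stand-in for a real model: search once, then answer from the tool output.
    last = history[-1]
    if last["role"] == "user":
        return json.dumps({"tool": "web_search", "args": {"query": last["content"]}})
    return "Answer based on search: " + last["content"]
```

For example, `run_agent(scripted_model, "camping near Yosemite")` makes one `web_search` call and then returns `"Answer based on search: [stub result for: camping near Yosemite]"`. The `max_steps` cap keeps a model that never stops calling tools from looping forever.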

Woodward from Google Labs provided a concrete example of how this evolution is already happening. When his team first built audio overviews for NotebookLM, it required an 11-step agentic system. Now, much of that complexity has been compressed into the model itself, and native audio generation capabilities have made the process dramatically simpler.

Building the Universal Assistant: Personal, Proactive, and Powerful

The conversation revealed Google's ambitious vision for the Gemini app: becoming a "truly universal AI assistant" that is personal, proactive, and powerful—what the team calls "the three Ps."

Personal means the assistant can use as much information as you choose to give it across Google's services. Proactive represents a shift from today's largely reactive AI systems to ones that can anticipate your needs and bring relevant information at the right moment. Powerful refers to the integration of advanced capabilities like video, imagery, and coding in a single assistant.

The Content Revolution: Everything Becomes Remixable

Perhaps one of the most profound changes discussed was what Woodward called "infinitely remixable content." This concept suggests that the format of information is becoming fluid—documents can become podcasts, images and videos can become action plans, and any form of content can be transformed into whatever format best serves the user's needs.

This capability is already visible in Google's audio overviews feature, which can take any collection of content and transform it into an engaging two-host podcast discussion. The feature has proven so successful that it's now spreading across Google's products, from search to the Gemini app to cloud services for developers.

What This Means for Developers and Businesses

Reid emphasized the importance of agility: "Be very agile, be constantly playing with the tech, and don't give up. There's a lot of things in search where the first time it didn't work, and the second time it didn't work, and the third time, and then suddenly it worked."

The pace of change means that capabilities that didn't exist in one model version might be available in the next. This requires a different approach to product development—one that involves constant experimentation and adaptation rather than long-term commitments to specific technical approaches.

Kavukcuoglu provided insight into how Google evaluates AI model performance, using three main categories:

- Academic evaluations: Standardized benchmarks that provide calibrated measures of model capacity

- Public leaderboards: Real users testing real prompts on platforms like LMArena (formerly LMSYS Chatbot Arena)

- Product integration: Feedback from actual deployment in products like search and the Gemini app

But Reid offered an important warning about over-relying on metrics: "A lighthouse is really good at getting you close to the island until you get too close to the island, and then if you keep going towards the lighthouse, you crash into it. The evals are always proxies for what you're really trying to build."

The Road Ahead: From Information to Intelligence

When asked to describe the future of search in just a few words, Reid's response was telling: "From information to intelligence." This encapsulates the fundamental shift happening across Google's AI initiatives—moving from systems that simply retrieve and display information to ones that can reason, synthesize, and act on that information.

The implications are far-reaching. We're moving toward a world where the distinction between search engines, personal assistants, and productivity tools begins to blur. Instead of adapting our behavior to work with technology's limitations, technology is increasingly adapting to work the way we naturally think and communicate.

For consumers, this means more intuitive interactions with technology and access to capabilities that were previously available only to experts. For businesses, it represents both an opportunity to build new types of products and a challenge to stay relevant as AI systems become more capable.

Google's AI developments represent more than incremental improvements to existing products. They signal a fundamental shift toward intelligent systems that can understand context, anticipate needs, and seamlessly integrate multiple types of information and capabilities. For users, developers, and businesses alike, the message is clear: the future belongs to those who can adapt to and leverage these rapidly evolving AI capabilities.
