AI Summary

Devstral is a new open-source AI model that runs locally and excels at solving complex software engineering problems, outperforming several cloud-based alternatives such as GPT-4.1-mini on real-world GitHub issues. Unlike typical code completion tools, Devstral is designed for tasks like understanding large codebases and debugging, mimicking a human developer's "agentic" behavior. Its local deployment capability offers significant advantages in privacy, cost control, and offline functionality, making it particularly appealing for sensitive enterprise projects.

While most developers have been relying on cloud-based coding assistants like GitHub Copilot or ChatGPT, a new player has emerged that challenges everything we thought we knew about AI coding tools. Meet Devstral, an open-source AI model that not only runs entirely on your local machine but actually outperforms many industry-leading closed-source alternatives at solving real software engineering problems.

What makes this particularly striking is that Devstral isn't just another code completion tool. It's designed to tackle the messy, complex reality of software engineering: understanding large codebases, identifying relationships between disparate components, and solving actual GitHub issues that have stumped human developers.

The Problem with Current AI Coding Tools

Anyone who has worked extensively with current AI coding assistants knows their limitations. They excel at writing standalone functions or completing code snippets, but they struggle with the kind of work that occupies most of a developer's time: understanding how different parts of a system interact, debugging complex issues across multiple files, and making changes that require deep contextual understanding of an entire codebase.

This gap between AI capabilities and real-world software engineering needs has been frustrating for developers and companies alike. Many organizations have been hesitant to rely heavily on AI coding tools for sensitive or complex projects, partly because the tools simply weren't sophisticated enough to handle the intricacies of production software development.

Enter Devstral: A Different Approach

Devstral represents a fundamentally different approach to AI-powered software development. Created through a collaboration between European AI company Mistral AI and All Hands AI, it's specifically trained to solve real GitHub issues using what researchers call "agentic" behavior.

Unlike traditional coding AI that simply generates code based on prompts, Devstral operates more like a human developer would. When given a software engineering task, it can explore codebases, read documentation, examine existing files, make targeted edits, and run tests to verify its solutions. This approach mirrors how experienced developers actually work when tackling unfamiliar code.
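The explore, edit, and verify cycle described above can be sketched as a small loop. This is only an illustrative toy, not how Devstral or any particular scaffold is implemented: `AgentLoop`, `scripted_policy`, and the tool names `read_file` and `run_tests` are all hypothetical, and a scripted policy stands in for the model so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical types for illustration; not part of Devstral or any real framework.
Action = Tuple[str, str]  # (tool name, argument)


@dataclass
class AgentLoop:
    """Toy agent loop: ask a policy for an action, run the tool, record the result."""
    policy: Callable[[List[str]], Action]    # stands in for the model
    tools: Dict[str, Callable[[str], str]]   # stands in for the scaffold's tools
    max_steps: int = 10
    history: List[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        self.history.append(f"task: {task}")
        for _ in range(self.max_steps):
            name, arg = self.policy(self.history)
            if name == "finish":
                return arg
            observation = self.tools[name](arg)
            # Each observation is fed back so the next decision can use it.
            self.history.append(f"{name}({arg}) -> {observation}")
        return "gave up: step budget exhausted"


def scripted_policy(history: List[str]) -> Action:
    """Fixed sequence of actions so the sketch runs without a model."""
    script = [("read_file", "app.py"), ("run_tests", ""), ("finish", "tests pass")]
    return script[len(history) - 1]


tools = {
    "read_file": lambda path: f"<contents of {path}>",
    "run_tests": lambda _: "1 passed",
}

agent = AgentLoop(policy=scripted_policy, tools=tools)
result = agent.run("fix failing test")
print(result)
```

The step budget matters in practice: without it, a policy that never emits `finish` would loop forever.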

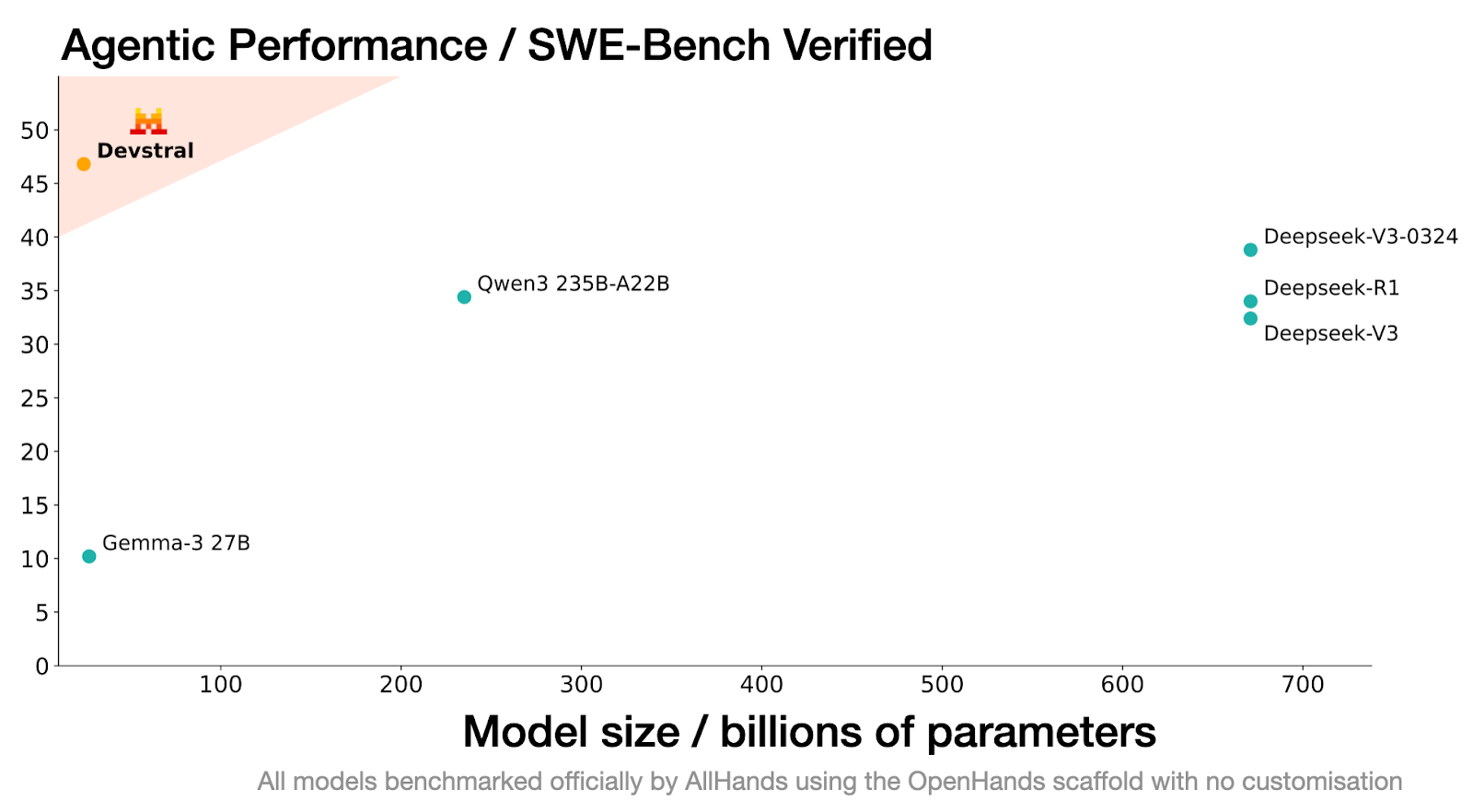

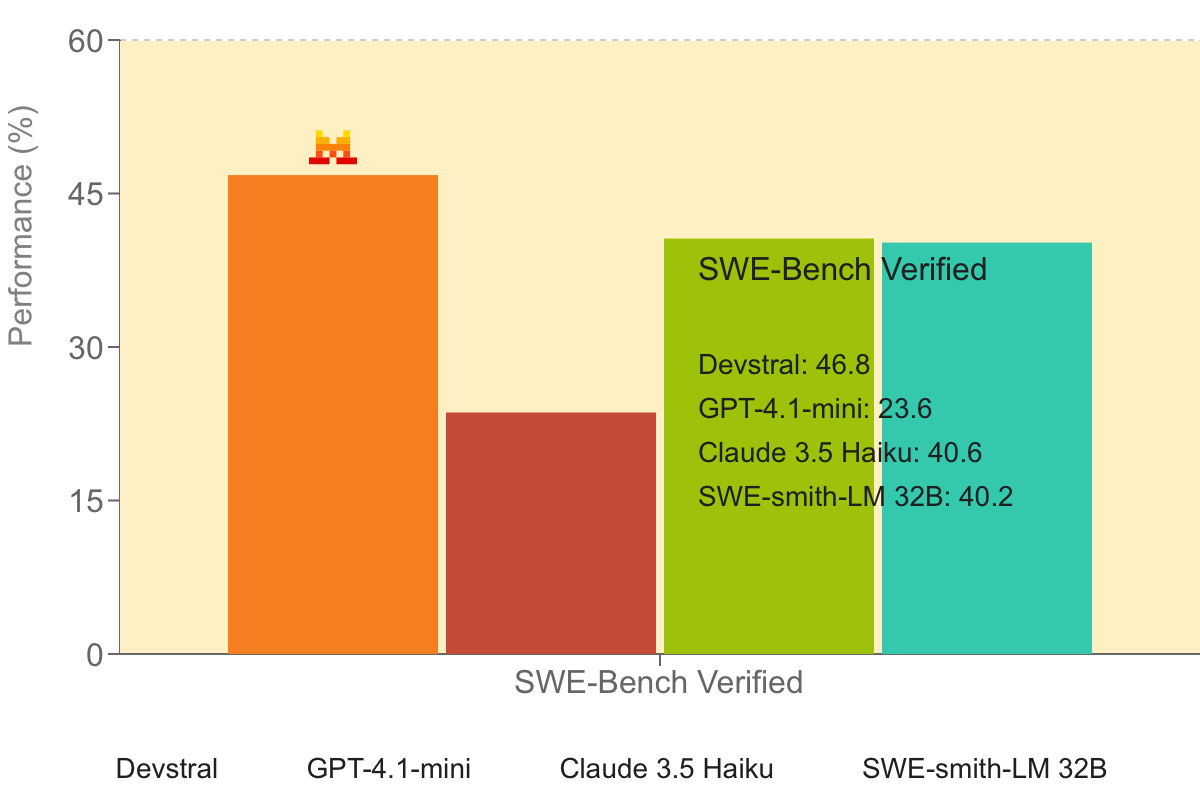

The results speak for themselves. On the SWE-Bench Verified benchmark, a rigorous test using 500 manually screened real-world GitHub issues, Devstral achieved a 46.8% success rate. To put this in perspective, that's more than 20 percentage points higher than GPT-4.1-mini and represents a significant leap over previous open-source models.

The Local LLM Advantage

Perhaps the most intriguing aspect of Devstral is that it runs entirely on local hardware. The model can run on either a single RTX 4090 graphics card or a Mac with 32GB of RAM. While this might sound like expensive hardware, it's actually quite accessible compared to the typical requirements for running large language models locally. As one developer on Hacker News noted:

The first number I look at these days is the file size via Ollama, which for this model is 14GB. I find that on my M2 Mac that number is a rough approximation to how much memory the model needs, which matters because I want to know how much RAM I will have left for running other applications.
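The rule of thumb in that comment reduces to simple subtraction. The sketch below assumes, as the commenter does, that the download size approximates the model's memory footprint; `ram_headroom_gb` and its `overhead_gb` parameter are hypothetical names introduced here, and real usage will also grow with context length.

```python
def ram_headroom_gb(total_ram_gb: float, model_file_gb: float,
                    overhead_gb: float = 0.0) -> float:
    """Rough RAM left for other applications.

    Treats the model file size as its memory footprint (an approximation),
    with an optional allowance for runtime overhead.
    """
    return total_ram_gb - model_file_gb - overhead_gb


# A 32 GB Mac and the 14 GB Ollama download mentioned above.
print(ram_headroom_gb(32, 14))
```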

This local deployment capability addresses several pain points that developers have with cloud-based AI tools:

- Privacy and Security: Code never leaves your machine, making it suitable for proprietary or sensitive projects

- Cost Control: No per-token charges or monthly subscriptions

- Offline Capability: Works without internet connectivity

- Latency: No network delays for processing requests
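As a rough illustration of what "code never leaves your machine" looks like in practice, the sketch below sends a prompt to a locally running Ollama server over its HTTP API. It assumes Ollama is installed, serving on its default port, and that the model has been pulled under the tag `devstral`; the helper names `build_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Ollama's default local endpoint; nothing here leaves localhost.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "devstral") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "devstral") -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example call, commented out because it requires a running Ollama server:
# print(generate("Explain what a race condition is in one sentence."))
```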

Real-World Performance and Developer Reactions

The developer community's initial reactions to Devstral have been cautiously optimistic but notably practical. Unlike the hype cycles that often surround new AI releases, discussions about Devstral have focused on concrete technical details and real-world usability.

One developer reported their experience: "I used devstral today with cline and open hands. Worked great in both. About 1 minute initial prompt processing time on an m4 max." Another noted, "I've just ran the model locally and it's making a good impression. I've tested it with some ruby/rspec gotchas, which it handled nicely."

However, the community has also been realistic about limitations. For hardware without high-end graphics cards, performance can be significantly slower. One user with an 8GB graphics card reported generation speeds of around 6 tokens per second, noting that "you'd probably want to pay for an API for anything using a large context window that is time sensitive."
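To see why 6 tokens per second feels slow, it helps to turn it into wall-clock time. The response length below is an assumed figure chosen for illustration, and the estimate ignores prompt processing, which adds further delay for large contexts.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to generate a response at a steady decoding speed."""
    return output_tokens / tokens_per_second


# Assumed 600-token answer at the reported 6 tokens per second.
print(generation_seconds(600, 6.0))  # 100.0
```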

The Open Source Advantage

Devstral's release under the Apache 2.0 license represents a significant shift in the AI development landscape. Unlike many "open-weight" models that come with usage restrictions, Apache 2.0 provides the legal clarity that enterprises need for commercial deployment.

As one commenter on Hacker News explained, "It's not about ethics, it's about legal risks. What if you want to fine tune a model on output related to your usage? Then my understanding is that all these derivatives need to be under the same license." This licensing approach makes Devstral particularly attractive for companies that need to customize AI models for their specific use cases.

The open-source nature also means that developers can examine, modify, and improve the model without the black-box limitations of proprietary alternatives. This transparency is crucial for understanding how the AI makes decisions and ensuring it behaves appropriately in production environments.

Technical Architecture and Capabilities

Devstral is built on a 24-billion parameter architecture, making it significantly smaller than some competing models while delivering superior performance on coding tasks. This efficiency is partly due to its specialized training regimen, which focused specifically on software engineering workflows rather than general language tasks.

The model excels at several key areas that traditional coding AI struggles with:

- Codebase Exploration: Understanding the structure and relationships within large software projects

- Context-Aware Editing: Making changes that consider the broader impact across multiple files

- Tool Integration: Working with development tools, testing frameworks, and version control systems

- Issue Resolution: Systematically approaching and solving complex bugs or feature requests

Integration and Ecosystem

Devstral works best when integrated with agentic coding platforms like OpenHands (formerly OpenDevin) or similar frameworks. These platforms provide the scaffolding that allows the AI to interact with codebases, run commands, and execute the kind of multi-step workflows that characterize real software development.

The model is already available through several distribution channels:

- Ollama: For easy local deployment and management

- LM Studio: With a user-friendly interface for non-technical users

- HuggingFace: For researchers and developers who want direct access to the model weights

- Mistral API: For those who prefer cloud deployment at $0.1 per million input tokens
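For the hosted option, the quoted input price makes a back-of-the-envelope estimate easy. The sketch covers input tokens only, since that is the only price given here; the function name and the example token count are illustrative.

```python
INPUT_PRICE_PER_MILLION_USD = 0.10  # input price quoted above


def input_cost_usd(input_tokens: int) -> float:
    """Estimated cost of the input side of API usage, in US dollars."""
    return input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION_USD


# Example: an agent session that reads 5 million input tokens in total.
print(f"${input_cost_usd(5_000_000):.2f}")
```

Agentic workflows tend to re-read large amounts of context on every step, so input tokens usually dominate the bill.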

The release of Devstral has significant implications for enterprise software development. Many companies have been hesitant to adopt AI coding tools due to security concerns about sending proprietary code to external services. A model that can run entirely on-premises while delivering competitive performance addresses this concern directly.

Limitations and Considerations

Performance varies considerably based on hardware configuration. Users with less capable systems report slower generation speeds that may not be practical for interactive development workflows. Additionally, while the model excels at certain types of software engineering tasks, it may not be as strong at general programming questions or educational use cases compared to more broadly trained models.

There's also the question of ongoing development and support. While open-source models benefit from community contributions, they may not receive the same level of consistent updates and improvements as commercial alternatives backed by large corporations.

Devstral represents more than just another AI model release; it signals a maturation of the open-source AI ecosystem and a recognition that different use cases require specialized approaches. The focus on real-world software engineering tasks, combined with local deployment capabilities and permissive licensing, addresses genuine pain points that developers and organizations have experienced with existing AI coding tools.

- Hugging Face: https://huggingface.co/mistralai/Devstral-Small-2505

- Ollama: https://ollama.com/library/devstral

- Kaggle: https://www.kaggle.com/models/mistral-ai/devstral-small-2505

- Unsloth: https://docs.unsloth.ai/basics/devstral

- LM Studio: https://lmstudio.ai/model/devstral-small-2505-MLX