AI Summary

Microsoft introduced Phi-4 reasoning models: Phi-4-reasoning (14B), Phi-4-reasoning-plus (enhanced with RL), and Phi-4-mini-reasoning (3.8B). Unlike standard models, these are specifically trained for complex multi-step problem-solving and analysis, bringing capabilities previously exclusive to massive models to a much smaller scale through methods like fine-tuning on curated data and synthetic reasoning traces. Phi-4-reasoning-plus achieves results comparable to those of far larger models on math benchmarks.

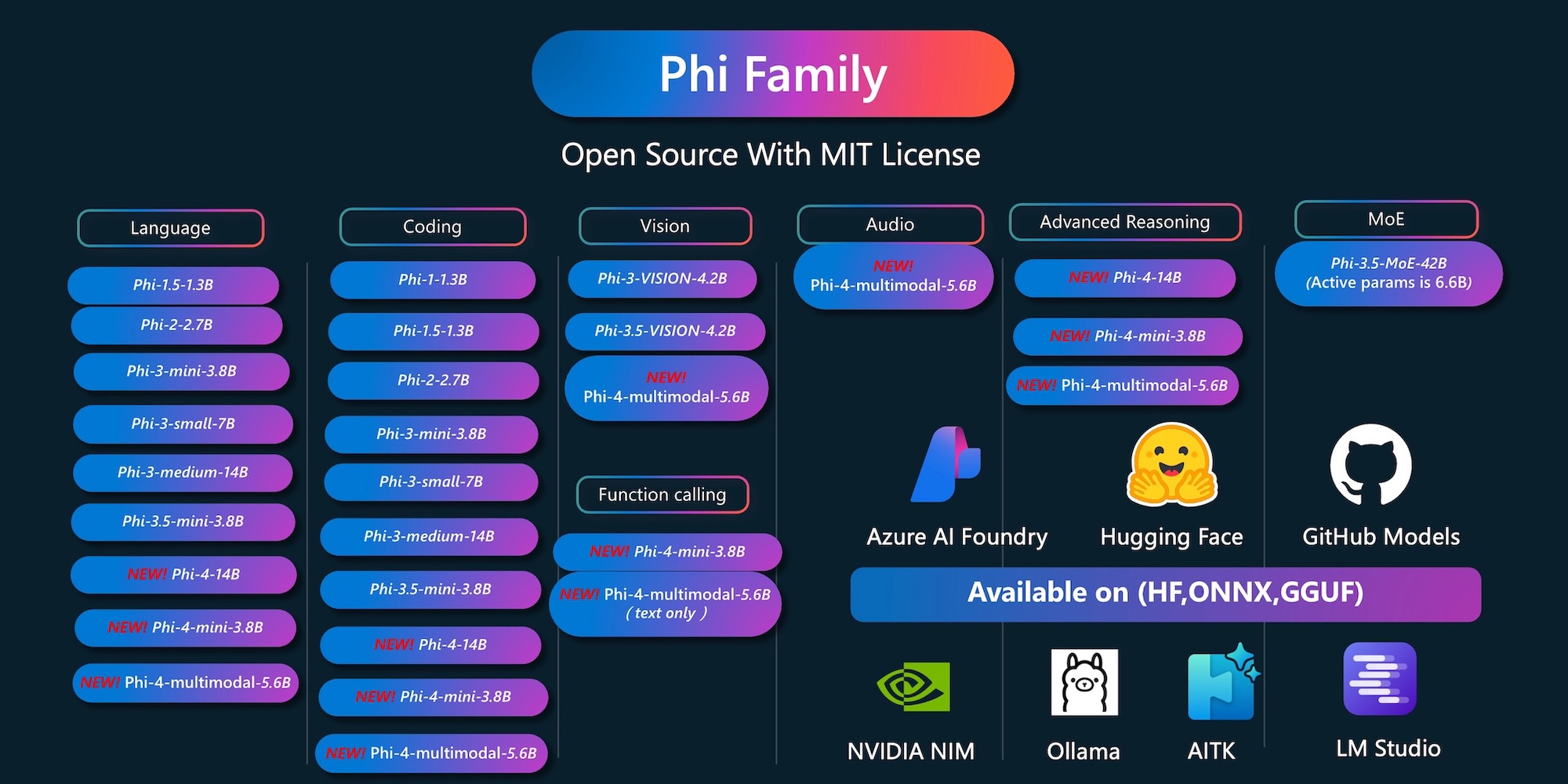

Last month, Microsoft added two new models to the Phi-4 series, Phi-4-mini-instruct (3.8B) and Phi-4-multimodal (5.6B), which offer powerful capabilities despite their compact size.

Microsoft just unveiled their newest innovations: Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning—marking what they're calling "a new era for small language models." But what makes these models special, and why should developers, businesses, and tech enthusiasts care about these developments?

What Are Reasoning Models and Why Do They Matter?

Unlike standard language models that primarily predict the next word based on patterns in training data, reasoning models are specifically trained to leverage inference-time scaling for complex tasks requiring multi-step problem solving and internal reflection. These models excel at:

- Mathematical reasoning and complex calculations

- Multi-step problem decomposition

- Scientific analysis and evaluation

- Serving as the backbone for agentic applications with complex, multi-faceted tasks

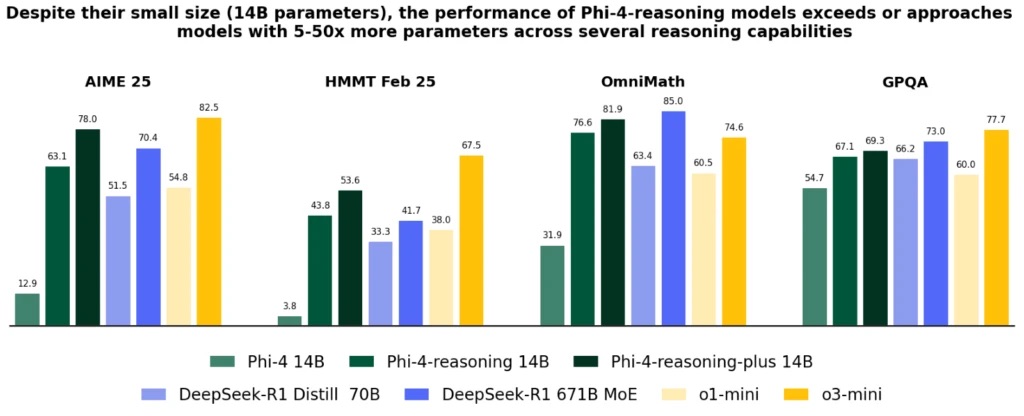

What makes Microsoft's announcement particularly notable is that such reasoning capabilities have typically been reserved for massive frontier models with hundreds of billions of parameters. Phi-4 is changing that paradigm.

Phi-4-reasoning: The 14B Parameter Powerhouse

The flagship model in this new lineup is Phi-4-reasoning, a 14-billion parameter open-weight reasoning model that rivals much larger models on complex reasoning tasks. To put this in perspective, it outperforms models roughly 5x its size and competes with some that are nearly 50x its size.

How did Microsoft achieve this? The technical approach combines several key elements:

- Supervised fine-tuning of the base Phi-4 model

- Carefully curated "teachable" prompts selected for optimal complexity and diversity

- High-quality synthetic datasets with reasoning demonstrations

- Training on over 1.4 million prompts with detailed reasoning traces

The Phi-4-reasoning model generates detailed reasoning chains that effectively leverage additional inference-time compute—meaning it can think through complex problems step by step, showing its work along the way.

Phi-4-reasoning-plus: The RL-Enhanced Version

Building on the foundation of Phi-4-reasoning, Microsoft developed Phi-4-reasoning-plus—a variant enhanced through a short phase of outcome-based reinforcement learning. This model utilizes about 1.5x more tokens than Phi-4-reasoning, effectively spending more "thinking time" on problems to deliver higher accuracy.

The results speak for themselves. On the AIME 2025 test (a qualifier for the USA Math Olympiad), Phi-4-reasoning-plus achieved performance comparable to the full DeepSeek-R1 model, which has 671 billion parameters. That is similar performance with roughly 2% of the parameters (14B versus 671B).
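The size comparison is easy to check by hand. The short sketch below reproduces the arithmetic using only the parameter counts quoted in this article; it assumes Phi-4-reasoning-plus has the same 14B parameters as Phi-4-reasoning, and the variable and function names are illustrative.

```python
# Parameter counts in billions, as quoted in this article.
# Assumption: Phi-4-reasoning-plus shares the 14B size of Phi-4-reasoning.
PHI4_REASONING_PLUS_B = 14.0
DEEPSEEK_R1_B = 671.0


def size_ratio(small_b: float, large_b: float) -> float:
    """Return how many times larger the second model is than the first."""
    return large_b / small_b


ratio = size_ratio(PHI4_REASONING_PLUS_B, DEEPSEEK_R1_B)
fraction_pct = 100.0 * PHI4_REASONING_PLUS_B / DEEPSEEK_R1_B

print(f"DeepSeek-R1 is about {ratio:.0f}x larger")                      # about 48x
print(f"Phi-4-reasoning-plus has about {fraction_pct:.1f}% as many parameters")  # about 2.1%
```

The same ratio is where the "nearly 50x its size" figure comes from.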

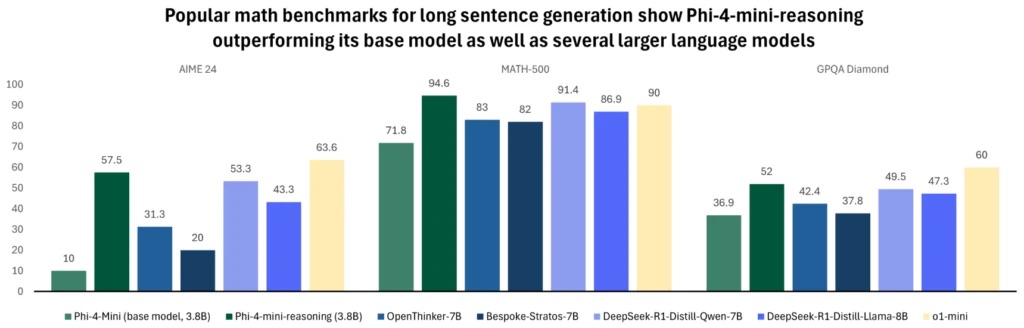

The Phi-4-mini-reasoning Model

For environments with even tighter compute constraints, Microsoft has also introduced Phi-4-mini-reasoning. This compact 3.8-billion parameter model is specifically optimized for mathematical reasoning in resource-constrained settings. Despite its small size, Phi-4-mini-reasoning:

- Outperforms models over twice its size on math benchmarks

- Provides high-quality, step-by-step problem solving

- Is ideal for educational applications and embedded tutoring

- Can be deployed on edge or mobile systems with limited resources

Fine-tuned with synthetic data generated by the DeepSeek-R1 model and trained on over one million diverse math problems spanning multiple difficulty levels, this tiny model represents an impressive achievement in AI efficiency.

Beyond Math: Surprising Improvements in General Capabilities

Perhaps most surprising is how these reasoning-focused improvements have generalized to broader capabilities. The models show significant improvements in:

- Long input context question answering (improved by 16 points over the base model)

- Instruction following (improved by 22 points)

- Coding abilities

- Knowledge and language understanding

- Safety detection for potentially harmful content

- General problem-solving skills

This transfer of reasoning abilities to general-purpose tasks suggests that reasoning may be a foundational meta-skill that improves AI performance across domains.

Real-World Applications: From Copilot+ PCs to Azure AI

Microsoft hasn't just created these models for research purposes—they're already being integrated into products and services. The Phi family has become an integral part of Copilot+ PCs with the NPU-optimized Phi Silica variant, designed to be preloaded in memory for blazing-fast responses and power-efficient operation. Some practical applications include:

- "Click to Do" functionality, providing intelligent text tools for any content on screen

- Developer APIs for easy integration into applications

- Productivity enhancements in applications like Outlook, offering Copilot summary features offline

- Future deployment on Copilot+ PC NPUs with low-bit optimizations

All models are also available for developers and researchers on Azure AI Foundry and Hugging Face.
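For readers who want to try one of the models, the following is a minimal sketch of loading Phi-4-mini-reasoning with the Hugging Face `transformers` library. The model ID comes from the Hugging Face link at the end of this article. The helper names, the prompt handling, and the generation settings are illustrative assumptions rather than Microsoft's recommended configuration, and argument names can differ between library versions, so check the model card before relying on it.

```python
from typing import Dict, List

# Model ID taken from the Hugging Face link in this article.
MODEL_ID = "microsoft/Phi-4-mini-reasoning"


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a question in the role/content message format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 1024) -> str:
    """Load the model (a multi-gigabyte download on first use) and generate one response."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype="auto")

    # Apply the model's own chat template and tokenize in one step.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_answer("Solve 3x + 7 = 22 and show each step.")` would return the model's step-by-step response. Reasoning models tend to produce long outputs, so the `max_new_tokens` budget may need to be raised for harder problems.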

The Technical Secret Sauce: Data Curation and Training Methodology

What makes these models truly remarkable isn't just their performance but how Microsoft achieved it. The approach emphasizes:

Meticulous data curation: Rather than simply increasing model size, Microsoft focused on carefully selecting and filtering prompts and responses to cover optimal difficulty levels and diversity.

Strategic data mixing: The training data combines STEM topics, coding problems, and safety-focused tasks in carefully balanced proportions.

Two-stage approach: Starting with supervised fine-tuning on high-quality data, followed by reinforcement learning on a smaller set of problems with verifiable solutions.

This approach aligns with the data-centric methods used in earlier Phi and Orca models, demonstrating that thoughtful data preparation can yield outsized results compared to simply scaling up model size.
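To make the second stage more concrete, here is a minimal sketch of what an outcome-based reward on problems with verifiable solutions can look like: the response earns a reward only if its final answer matches a known reference. The `\boxed{...}` answer convention, the exact-match comparison, and both function names are assumptions made for illustration. The reward Microsoft actually used is not described in this article and may include additional terms.

```python
import re
from typing import Optional


def extract_final_answer(response: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in a response, or None if absent."""
    # Hypothetical convention: the final answer is wrapped in \boxed{...}.
    matches = re.findall(r"\\boxed\{([^{}]*)\}", response)
    return matches[-1].strip() if matches else None


def outcome_reward(response: str, reference: str) -> float:
    """Return 1.0 if the extracted final answer equals the reference, else 0.0."""
    answer = extract_final_answer(response)
    if answer is None:
        return 0.0
    return 1.0 if answer == reference.strip() else 0.0


correct = "First, 3x = 15, so x = 5. The answer is \\boxed{5}."
wrong = "I think the answer is \\boxed{4}."
print(outcome_reward(correct, "5"))  # 1.0
print(outcome_reward(wrong, "5"))    # 0.0
```

Because the reward depends only on the final answer, it can be computed automatically, which is why this stage is restricted to problems whose solutions can be verified.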

The Reasoning Gap: Room for Improvement

Despite these impressive results, Microsoft's research reveals interesting insights about current limitations and opportunities:

- Performance gaps remain between typical and optimal generations, suggesting room for improvement in training and decoding methods

- There are domain-specific variations in improvement, with math and physics showing stronger gains than biology and chemistry

- Within mathematics, discrete mathematics shows relatively modest improvements compared to other areas

- Extensive parallelization at test time allows the models to surpass even their teacher models in some benchmarks

These findings highlight areas requiring attention for future improvements and point to promising research directions.
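Two of the findings above can be illustrated with a few lines of code: the gap between typical and optimal generations, and the benefit of running many generations in parallel at test time. The sketch below assumes the parallel strategy is majority voting over sampled answers, which is one common approach; this article does not say which aggregation method Microsoft used. The sample answers are invented for the example.

```python
from collections import Counter
from typing import List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer among independently sampled generations."""
    return Counter(answers).most_common(1)[0][0]


def average_accuracy(answers: List[str], reference: str) -> float:
    """Fraction of samples that are correct: the 'typical' generation quality."""
    return sum(a == reference for a in answers) / len(answers)


def best_of_n(answers: List[str], reference: str) -> bool:
    """True if at least one sample is correct: the 'optimal' generation quality."""
    return any(a == reference for a in answers)


# Invented final answers from five hypothetical samples of the same problem.
samples = ["42", "41", "42", "40", "42"]

print(average_accuracy(samples, "42"))  # 0.6
print(best_of_n(samples, "42"))         # True
print(majority_vote(samples))           # 42
```

In this toy case a single sample is right only 60% of the time, yet the majority answer is correct, which shows how aggregating parallel samples can lift accuracy above what a typical single generation achieves.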

The Big Picture: What This Means for AI's Future

Microsoft's Phi-4 reasoning models represent a significant milestone in AI development—demonstrating that with careful data curation, innovative training methodologies, and targeted optimization, small language models can achieve capabilities previously thought to require massive computational resources. This development has several important implications:

- More efficient AI deployment could dramatically reduce energy consumption and computational costs

- Edge computing applications become more feasible with powerful models that can run on resource-constrained devices

- The democratization of advanced AI capabilities becomes possible as hardware requirements decrease

- Research focus may shift from simply scaling up models to more efficient training methodologies

As these models continue to evolve and find their way into more applications and devices, they may well redefine our expectations about what's possible with efficient AI. The future of AI isn't just about building bigger models—it's about building smarter ones. And with Phi-4, Microsoft is showing us exactly how that's done.

Phi open model family:

https://azure.microsoft.com/en-us/products/phi

Azure AI Foundry:

https://ai.azure.com/explore/models/?selectedCollection=phi

Hugging Face:

https://huggingface.co/microsoft/Phi-4-mini-reasoning

Technical Report:

Phi-4-reasoning Technical Report

Technical Report:

Phi-4-Mini-Reasoning: Exploring the Limits of Small Reasoning Language Models in Math