March 16, 2025 19:22

Google has unveiled Gemma 3, its newest family of open-weight large language models (LLMs). Released under the Gemma Terms of Use, which permit commercial use and modification, Gemma 3 represents a major step forward in accessible AI, combining strong capabilities with high efficiency. What makes Gemma 3 notable is that even its largest model can run on a single GPU, and the smaller variants fit on consumer-grade cards. This puts advanced AI within reach of individual developers, researchers, and smaller organizations who previously faced hardware barriers to AI innovation.

What is Gemma 3?

Gemma 3 is Google DeepMind's latest generation of open language models, designed to be lightweight yet powerful. The family comes in four sizes (1B, 4B, 12B, and 27B parameters), each available in pre-trained (base) and instruction-tuned versions.

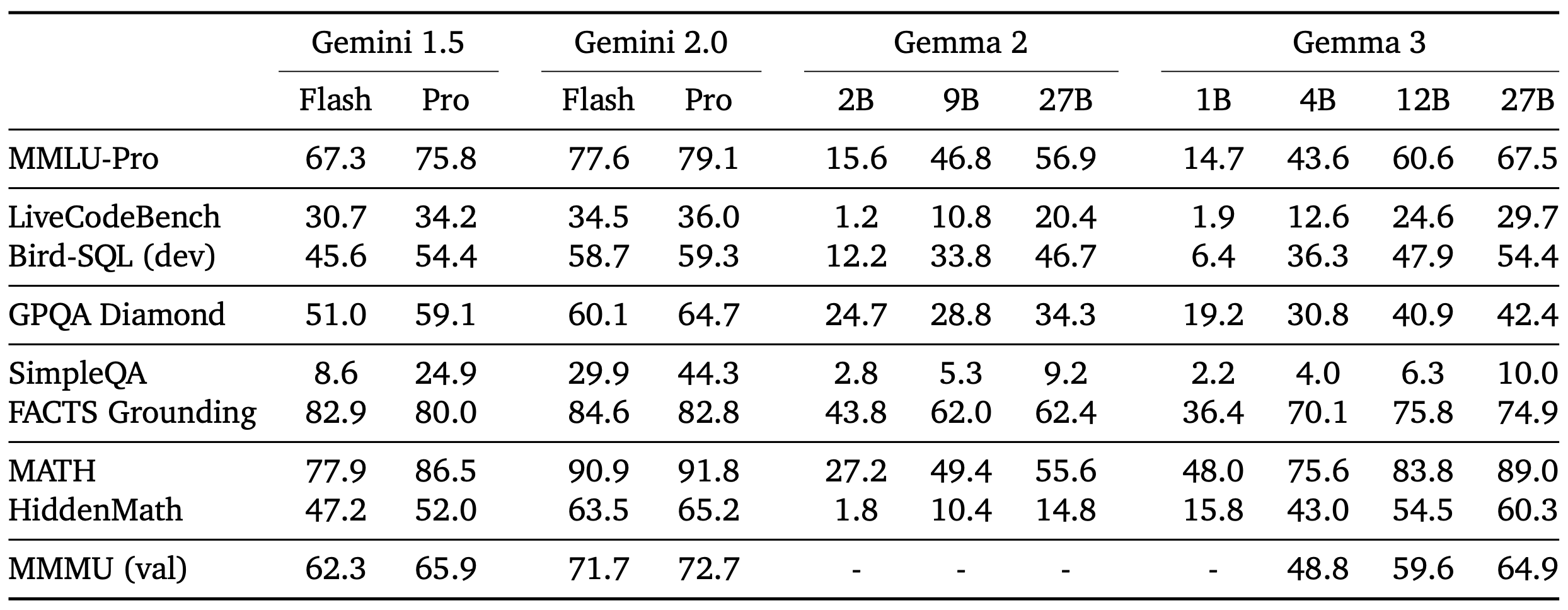

These models build on the foundation of their predecessor, Gemma 2, but incorporate significant architectural improvements and training innovations. Trained on a large and diverse corpus (text for all sizes, plus images for the multimodal variants), Gemma 3 models perform well across a wide range of natural language processing tasks, from content generation and summarization to reasoning and coding. Here is a comparison table of all the Gemma models released by Google:

What sets Gemma 3 apart from many other language models is its excellent performance-to-compute ratio. The models have been engineered with efficiency in mind, enabling them to run effectively on consumer-grade hardware while still delivering results that compete with much larger models requiring specialized infrastructure.

Key Features and Technical Specifications

Gemma 3 introduces several impressive technical advances that position it at the forefront of efficient AI models:

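- Extended Context Window: The 4B, 12B, and 27B models support a 128K-token context window (the 1B model supports 32K tokens). Using the common rule of thumb of about 0.75 English words per token, that is roughly 96,000 words. This lets the models process longer conversations and documents, and stay coherent across them, than many competing models can.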

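- Improved Architecture: The model refines the transformer architecture with grouped-query attention (GQA) and an interleaved attention layout. For every global layer that attends to the full context, there are five local layers, each attending only to a sliding window of the most recent 1,024 tokens. It also replaces Gemma 2's soft-capping with QK-norm. These changes help improve quality while keeping computation and memory in check.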

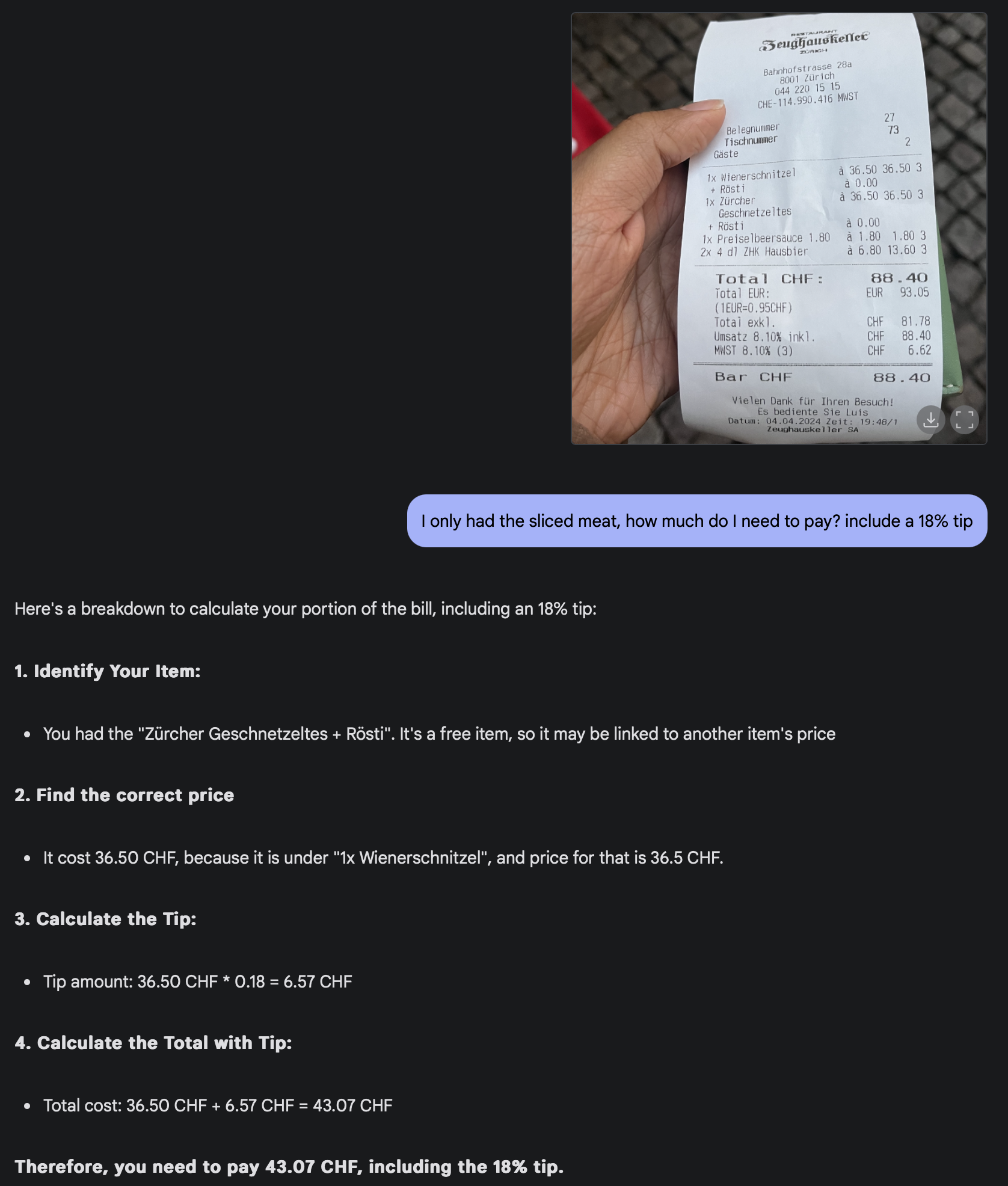

- Multi-Modal Capabilities: The 4B, 12B, and 27B models accept images as well as text as input, through a SigLIP-based vision encoder, and generate text about them, for example describing a picture or answering questions about it. The 1B model is text-only.

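- KV Cache Optimizations: The local attention layers only attend to a 1,024-token window, so they need to cache keys and values for just those tokens. Only the global layers keep a key-value cache for the full context, which sharply reduces memory requirements at long context lengths.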

- Instruction Tuning: The instruction-tuned variants were post-trained with knowledge distillation from a larger instruction-tuned teacher, followed by reinforcement-learning fine-tuning, so that they follow human instructions more effectively.

- Multilingual Support: Google reports out-of-the-box support for over 35 languages and pretrained support for over 140 languages, although quality still varies from language to language.
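The short sketch below shows the interleaved attention layout and how it affects the KV cache. It is not Gemma 3's implementation. The five-local-to-one-global ratio and the 1,024-token window follow Google's technical report. Everything else is an assumption: placing the global layer at every sixth position, plus the layer count, number of key-value heads, and head dimension in the example. These values are hypothetical and chosen only to show the order of magnitude of the savings.

```python
LOCAL_PER_GLOBAL = 5  # five sliding-window (local) layers per global layer
WINDOW = 1024         # span of each local layer's sliding window, in tokens


def layer_types(num_layers: int) -> list:
    """Label each layer 'local' or 'global'; assumes every sixth layer is global."""
    return ["global" if (i + 1) % (LOCAL_PER_GLOBAL + 1) == 0 else "local"
            for i in range(num_layers)]


def attention_mask(seq_len: int, kind: str, window: int = WINDOW) -> list:
    """mask[q][k] is True when query position q may attend to key position k."""
    # Causal in both cases; local layers additionally restrict to the last `window` keys.
    return [[k <= q and (kind == "global" or q - k < window)
             for k in range(seq_len)]
            for q in range(seq_len)]


def kv_cache_bytes(context: int, num_layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2, interleaved: bool = True) -> int:
    """Estimate the KV cache (keys + values) for one sequence of `context` tokens."""
    per_token_per_layer = 2 * kv_heads * head_dim * bytes_per_value  # K and V
    total = 0
    for kind in layer_types(num_layers):
        # With interleaving, a local layer never needs more than WINDOW cached tokens.
        cached = min(context, WINDOW) if interleaved and kind == "local" else context
        total += cached * per_token_per_layer
    return total


if __name__ == "__main__":
    # Hypothetical configuration for illustration only; NOT Gemma 3's published values.
    cfg = dict(context=128_000, num_layers=48, kv_heads=8, head_dim=128)
    full = kv_cache_bytes(**cfg, interleaved=False)
    mixed = kv_cache_bytes(**cfg, interleaved=True)
    print(f"all-global: {full / 2**30:.1f} GiB, interleaved: {mixed / 2**30:.1f} GiB")
```

With these made-up settings the script prints `all-global: 23.4 GiB, interleaved: 4.1 GiB`. The sliding-window layers contribute very little, so the one-in-six global layers dominate the cache.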

Performance Benchmarks

Perhaps the most impressive aspect of Gemma 3 is how it stacks up against much larger models in benchmark testing. According to Google's technical report, Gemma 3 27B achieves:

- Roughly 98% of DeepSeek-R1's Elo score in preliminary Chatbot Arena (LMArena) human-preference rankings, despite being far smaller

- Superior performance to models with comparable parameter counts on reasoning benchmarks

- Strong results on MMLU (Massive Multitask Language Understanding), GSM8K (math problem solving), and HumanEval (code generation)

The smaller Gemma 3 models also outperform many existing open models of similar size and even compete with some larger ones. They show particular strength in reasoning tasks and maintain good performance across various domains.

What's truly remarkable is that Gemma 3 27B can run on a single NVIDIA A100 GPU with 80GB of memory, while many competing models with similar capabilities require multiple GPUs or specialized hardware setups. The smaller variants can run on consumer-grade GPUs such as the NVIDIA RTX 4090 (24GB), using quantized weights for the larger of them.
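The hardware claims above come down to simple arithmetic: the weights alone take roughly the parameter count times the bytes stored per parameter. The sketch below counts weights only. It treats the 80% headroom as an assumed rule of thumb rather than a measured figure. Real memory use also depends on context length, batch size, and the inference framework.

```python
# Approximate storage per parameter at common numeric precisions, in bytes.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "bf16") -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 10**9 bytes)."""
    # (params_billion * 1e9 params) * bytes/param / 1e9 bytes/GB: the 1e9 factors cancel.
    return params_billion * BYTES_PER_PARAM[precision]


def fits_on_gpu(params_billion: float, gpu_gb: float, precision: str = "bf16",
                headroom: float = 0.8) -> bool:
    """Rough check that the weights use at most `headroom` of GPU memory.

    The remaining share is left for the KV cache, activations, and runtime
    overhead; the 0.8 default is an assumed rule of thumb, not a measured value.
    """
    return weight_memory_gb(params_billion, precision) <= headroom * gpu_gb


if __name__ == "__main__":
    for size in (4, 12, 27):
        for gpu_name, gpu_gb in (("RTX 4090", 24), ("A100 80GB", 80)):
            ok = [p for p in BYTES_PER_PARAM if fits_on_gpu(size, gpu_gb, p)]
            print(f"{size}B on {gpu_name}: {', '.join(ok) or 'none'}")
```

Under these assumptions, a 27B model in bf16 needs about 54 GB for its weights. That fits an 80GB A100, but not a 24GB RTX 4090 unless the model is quantized to roughly 4 bits per parameter.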

Single-GPU Operation: Why It Matters

The ability to run advanced AI models on a single GPU represents a significant democratization of access to artificial intelligence. Here's why this matters:

- Lower Cost Barrier: High-end AI development has traditionally required expensive clusters of GPUs or specialized hardware like Google's TPUs. Gemma 3's efficiency drastically reduces the financial barrier to entry.

- Wider Accessibility: Individual developers, academic researchers, and smaller companies can now work with state-of-the-art AI without massive infrastructure investments.

- Reduced Environmental Impact: AI model training and inference are notoriously energy-intensive. More efficient models mean less environmental impact from AI development and deployment.

- Edge AI Potential: While still requiring a substantial GPU for full performance, the efficiency gains point toward future possibilities for running powerful AI models closer to end users, rather than exclusively in cloud data centers.

- Democratized Innovation: When advanced AI tools become more accessible, innovation can come from more diverse sources, potentially leading to applications and improvements that might not emerge in a more restricted ecosystem.

Open Source Philosophy and Responsible AI

Google's decision to release Gemma 3's weights openly under the Gemma Terms of Use reflects a commitment to transparent and collaborative AI development. These terms allow commercial use, modification, and redistribution, subject to a prohibited-use policy. This approach stands in contrast to some other leading AI models that remain closed-source or are available only through an API. The open availability of Gemma 3 allows researchers and developers to:

- Inspect the model's architecture and training methodology

- Adapt and fine-tune the model for specific applications

- Contribute improvements back to the community

- Build upon the technology for new innovations

Google has also emphasized responsible AI development with Gemma 3, including:

- Comprehensive safety evaluations and red-teaming before release

- Publication of a detailed system card documenting the model's capabilities and limitations

- Responsible use guidelines and best practices

- Safety tuning to reduce harmful outputs

This combination of openness and responsibility aims to foster innovation while mitigating potential risks associated with advanced AI technologies.

Practical Applications

The efficiency and capabilities of Gemma 3 open up numerous practical applications across various domains:

- Software Development: Gemma 3's strong coding abilities make it valuable for code generation, debugging assistance, and explaining complex programming concepts.

- Content Creation: The model can assist with writing, editing, and creative content generation, helping creators overcome blocks and refine their work.

- Education: Teachers and students can use Gemma 3 for personalized tutoring, explanation of complex concepts, and assistance with research.

- Research Assistants: Researchers can employ the model to summarize papers, generate hypotheses, and explore connections between different fields.

- Customer Service: The instruction-tuned variants can power chatbots and customer service applications that understand and respond to natural language queries.

- Data Analysis: Gemma 3 can help extract insights from text data, summarize findings, and generate reports based on unstructured information.

- Small Business Applications: The accessibility of Gemma 3 means small businesses can implement AI solutions that were previously only available to larger enterprises.
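For chatbot-style uses such as customer service, the instruction-tuned checkpoints expect conversations in Gemma's turn format. Each turn is marked with `<start_of_turn>` and `<end_of_turn>`, and the roles are `user` and `model`. The hand-rolled formatter below is only a sketch of that structure. The `Message` class and `format_gemma_prompt` function are hypothetical helpers. Folding a system instruction into the first user turn is an assumption about how to handle instructions. In practice, prefer the tokenizer's `apply_chat_template` method in Hugging Face Transformers, which applies the template shipped with the model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Message:
    role: str      # "user" or "model" (Gemma's name for the assistant side)
    content: str


def format_gemma_prompt(messages: List[Message], system: Optional[str] = None) -> str:
    """Render a conversation in Gemma's turn-marker format, ending with an open model turn."""
    parts = ["<bos>"]
    for i, msg in enumerate(messages):
        if msg.role not in ("user", "model"):
            raise ValueError(f"unsupported role: {msg.role!r}")
        text = msg.content
        # Assumption: there is no separate system role, so any system
        # instruction is prepended to the first user turn.
        if i == 0 and system and msg.role == "user":
            text = f"{system}\n\n{text}"
        parts.append(f"<start_of_turn>{msg.role}\n{text}<end_of_turn>\n")
    # Leave the model's turn open so generation continues from here.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = format_gemma_prompt(
        [Message("user", "Where is my order?")],
        system="You are a polite customer-support assistant.",
    )
    print(prompt)
```

If your tokenizer adds the `<bos>` token automatically, drop it from the string to avoid a duplicate.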

Here is another example of visual interaction with the Gemma 3 27B IT (instruction-tuned) model, highlighted in the Gemma 3 technical report:

Limitations and Challenges

Despite its impressive capabilities, Gemma 3 does have limitations that potential users should be aware of:

- Knowledge Cutoff: Like all pre-trained models, Gemma 3's knowledge has a cutoff date, after which it doesn't have information about world events or technological developments.

- Hallucinations: As with other LLMs, Gemma 3 can sometimes generate plausible-sounding but factually incorrect information, especially when asked about obscure topics.

- Language Limitations: While it has some multilingual capabilities, Gemma 3's performance is strongest in English, with varying levels of effectiveness in other languages.

- Resource Requirements: Though more efficient than many alternatives, Gemma 3 still requires substantial computational resources, especially the 27B model.

- Specialized Domain Knowledge: The model may have limitations when dealing with highly specialized or technical domains that were underrepresented in its training data.

- Bias Concerns: Like all models trained on internet data, Gemma 3 may reflect and potentially amplify biases present in its training corpus, though Google has implemented measures to mitigate this.

How Gemma 3 Compares to Other AI Models

To understand Gemma 3's position in the AI ecosystem, it's helpful to compare it with other prominent models:

- GPT-4 and Claude 3: Proprietary models like GPT-4 and Claude 3 still maintain an edge in overall capabilities, especially in complex reasoning tasks. However, Gemma 3 narrows the gap significantly while offering openly available weights.

- Llama 3: Meta's Llama 3 is perhaps Gemma 3's closest competitor in the open-source space. Gemma 3 appears to edge out Llama 3 in several benchmarks, particularly in reasoning and coding tasks, though both families offer strong performance.

- Mistral AI models: Gemma 3 competes favorably with Mistral's offerings, with the 27B model outperforming Mistral's similarly sized models on most benchmarks.

- Original Gemma: Compared to the first Gemma release, Gemma 3 represents a substantial improvement across all metrics, with better reasoning, longer context handling, and more reliable instruction following.

- Specialized Models: Specialized models like DeepSeek-Coder or MathGPT may still have advantages in their specific domains, but Gemma 3's versatility combined with strong domain performance makes it a compelling alternative.

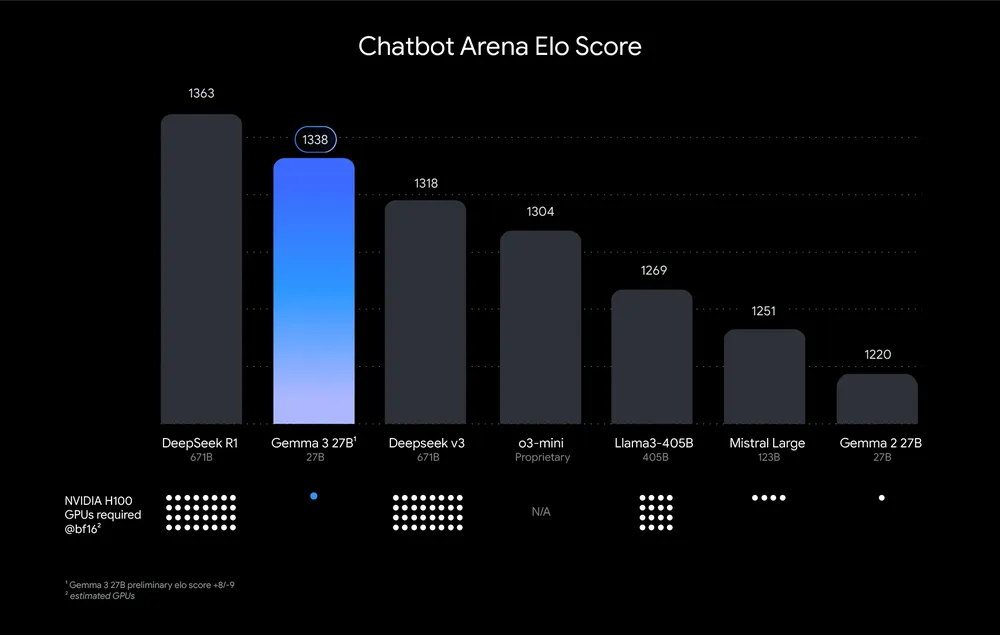

Here is the full evaluation of the Gemma 3 27B IT model in Chatbot Arena, where models are compared against each other through blind side-by-side evaluations by human raters.
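Arena leaderboards convert these pairwise human votes into ratings on an Elo-style scale (LMArena fits a Bradley-Terry model and reports it on that scale). A rating gap then maps to an expected head-to-head win rate through the standard logistic formula. The sketch below uses base 10 and a scale factor of 400. The ratings in the example are hypothetical, not read from the chart.

```python
import math


def expected_win_rate(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Expected probability that A is preferred over B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


def rating_gap_for(win_rate: float, scale: float = 400.0) -> float:
    """Inverse of expected_win_rate: the gap (A minus B) giving a target win rate for A."""
    if not 0.0 < win_rate < 1.0:
        raise ValueError("win_rate must be strictly between 0 and 1")
    return scale * math.log10(win_rate / (1.0 - win_rate))


if __name__ == "__main__":
    # Hypothetical ratings, chosen only to illustrate the formula.
    for gap in (10, 25, 50, 100, 200):
        p = expected_win_rate(1300, 1300 + gap)
        print(f"{gap:>3}-point gap: lower-rated model wins about {p:.0%} of votes")
```

For example, a 25-point gap gives the lower-rated model an expected win rate of about 46%, close to a coin flip. These ratings are only defined up to an additive constant, so differences between them carry meaning while ratios do not.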

Future Implications

The release of Gemma 3 has several important implications for the future of AI development:

- Accelerated Innovation Cycle: The accessibility of powerful open-source models is likely to accelerate the pace of innovation in AI, as more developers can build upon and improve existing technologies.

- Efficiency Arms Race: Gemma 3's impressive efficiency could spark increased focus on optimizing AI models for better performance-to-compute ratios throughout the industry.

- Specialized Adaptations: The open-source nature of Gemma 3 will encourage developers to create specialized adaptations for specific industries and applications.

- Ethical AI Development: Google's emphasis on responsible AI with Gemma 3 may establish new norms for transparent and ethical development practices in the AI community.

- Edge AI Advancement: The efficiency gains demonstrated by Gemma 3 represent a step toward more powerful AI capabilities on edge devices.

- Democratized AI Access: By reducing hardware requirements for advanced AI, Gemma 3 contributes to a more inclusive AI ecosystem where innovations can come from anywhere.

Google's Gemma 3 represents a significant milestone in the development of accessible, efficient AI. By delivering performance that rivals much larger models while running on a single GPU, Gemma 3 makes advanced AI capabilities accessible to a much broader audience of developers, researchers, and organizations. The open-source nature of the model, combined with Google's emphasis on responsible AI development, creates an opportunity for collaborative innovation that balances technological advancement with ethical considerations.
